Welcome to Polymathic Being, a place to explore counterintuitive insights across multiple domains. These essays take common topics and investigate them from different perspectives and disciplines to come up with unique insights and solutions.

Today's topic takes a different look at our brain, how it operates, and what this means for us in work, life, and play. Hang tight as we explore the things we see and don’t see, and the fictions we create to make sense of the world around us. Don’t worry though, at the end, you’ll also have some tools on hand to check your blind spots and reframe your reality to ensure a solid foundation.

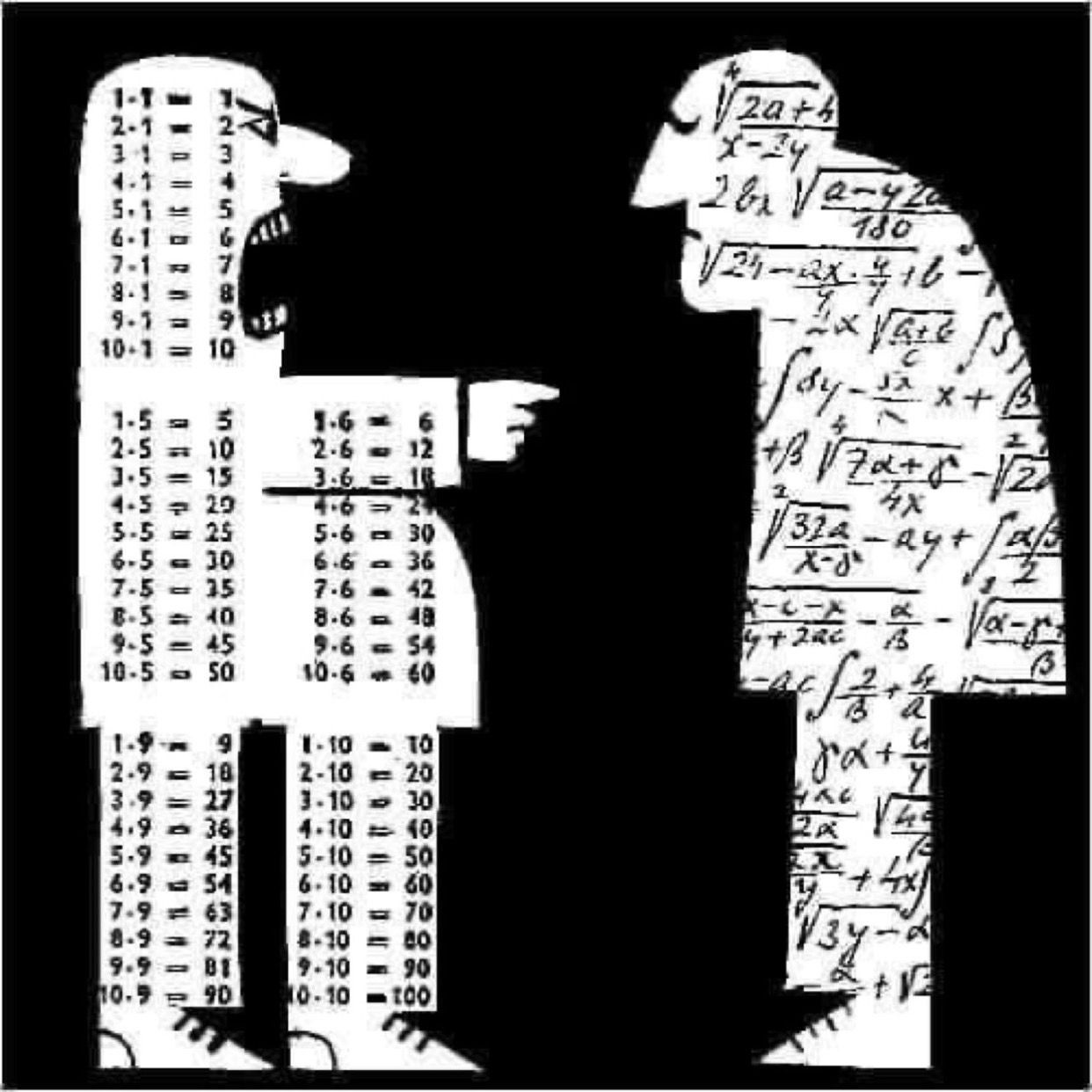

Throughout this essay are optical illusions to enjoy. Many work because of the blind spot in each eye, created by the optic nerve, that our brain ‘smooths’ over. Others work because our brain forces a contextualization that does not exist in the image. These illuminate how what we see is being filtered through our own perceptions.

Cognitive Blindness. We all suffer from it. It doesn't matter how smart or educated you are; it’s programmed deep into our brains for a variety of important reasons that we’ll get into. While you can’t prevent it, what you can do is train yourself to recognize it and adjust to compensate for it.

What is cognitive blindness? It’s the inability to see something, such as an idea, position, opinion, or fact, that contradicts one of our accepted realities. This is what is currently so divisive in politics. In a gross generality, the left cannot see the side of the right (other than their characterization) and the right cannot see the side of the left (other than their characterization) because to do so would be to contradict elements of your own position.

As you may have guessed, the title of this essay alludes to the Game of Thrones series, where the character Jon Snow was so blinded by his perceived reality, shaped by his cultural and personal biases, that he could not see the truth in front of him. The Wildling character Ygritte loved to point out this blindness by chiding:

“You know nothing Jon Snow!”

This blindness goes much further and deeper than politics. The NPR show Hidden Brain recently aired an episode titled "How Your Beliefs Shape Reality" which uncovered that our cultural biases are not just passive reflections of reality, but active creators of reality. They influence how we perceive, interpret, and respond to events and situations. Cognitive blindness and our other biases are further shaped by our social environment, our personal experiences, our emotions, and our motivations. The resulting ‘reality’ can have positive or negative effects on our well-being, our relationships, our performance, and our physical and mental health.

The issue with these cognitive biases has also been discussed in relation to the endemic depression and anxiety of young women today, with this explanation:

“In [Cognitive Behavioral Therapy] you learn to recognize when your ruminations and automatic thinking patterns exemplify one or more of about a dozen ‘cognitive distortions,’ such as catastrophizing, black-and-white thinking, fortune telling, or emotional reasoning. Thinking in these ways causes depression, as well as being a symptom of depression. Breaking out of these painful distortions is a cure for depression.”

The creative team at Pixar captured all this in the fantastic movie Inside Out. While working on the neuroscience behind the film, they found out that only 40% of what we see comes in through our eyes. The rest is created from memories, from patterns learned through experience, and from bias, all because of the two main jobs our brain is designed to perform.

“All models are wrong, some are useful”

- George Box

The Brain’s Job Description

As we covered in the essay The Con[of]Text, our brain has two job duties:

Make sense of the world around us.

Be computationally efficient.

Job 1

The first line in our brain’s job description is to make sense of the world around us. The anatomy of our brains varies little from person to person. Yet no two people perceive the world exactly the same. Variation in human perception is a function of cognitive, affective, and memory processes that imprint other patterns on top of sensory information on its way to perception, and ultimately behavior. As elegantly described in the book Autobiography of God: Biopsy of A Cognitive Reality:

“[E]ach of our brains creates its own myth about the universe.”

This sense-making in our brains also leaves its mark on cultures over time, and it can affect even the very concept of time. For instance, in the West, we view time as linear with the future in front of us and the past behind us. Eastern cultures, such as those of Iraq and the Hebrew culture that influenced the Bible, view time as cyclical, and even more interesting, as facing backward. They view the past as the only thing known because you can see it, and the future, behind you, is unknown. With this view, you can only adapt to the future by understanding the patterns of the past. When I was deployed to Iraq, and I’d ask a local leader when something would be done, the inevitable answer was “Inshallah” or “If God Wills” because only God can know the future.

We should note that there is cognitive blindness even in retrospect. The adage “Hindsight is 20/20” isn’t true. Ed Catmull, in the book Creativity, Inc., shares:

“Our view of the past, in fact, is hardly clearer than our view of the future. While we know more about a past event than a future one, our understanding of the factors that shaped it is severely limited. Not only that, because we think we see what happened clearly…we often aren’t open to knowing more.”

Our brains work to make sense of the world around us, and in doing so create patterns in culture and thought that provide a reference structure. These patterns rely on biases that exist because there is simply too much information to process and thus, we get to the second job function of our brains.

Job 2

The second line in our brain’s job description is to be efficient. We can’t see all, hear all, or know all and so our brain has hundreds of shortcuts to reduce the cognitive burden. These shortcuts, or biases, are used to provide cognitive smoothing and efficient data processing. Examples of bias that impact cognitive blindness include:

Negativity bias or Negativity effect: Psychological phenomenon where we have greater recall of unpleasant compared with positive memories of an event

Confirmation bias: The tendency to search for, interpret, focus on, and remember information in a way that confirms our preconceptions

Anchoring or focalism: The tendency to rely on, or anchor to one trait or piece of information in decision-making (often the first piece of information acquired)

Fundamental attribution error: The tendency for people to overemphasize personality-based explanations for behaviors observed in others while underemphasizing the role and power of situational influences

These biases can be referred to as heuristics, or ‘rules of thumb,’ which provide decisions that are often correct, or correct enough, for that situation. This cognitive smoothing can also make individuals completely oblivious to the gaps or inconsistencies that necessitated the smoothing by actually removing them from consciousness altogether. Your brain literally blinds itself to the inconsistencies.

In the essay on Stereotyping Properly, we explained that stereotypes exist for the same reason we have 200 other named biases working in our brains. First off, we can’t compute every possible permutation and second, we don’t have the time to spend trying to identify a friend or foe. A stereotype is simply a measurement heuristic based on population averages.

Biases are critical to helping the brain process vast quantities of data accurately and quickly. Biases are problematic when they are not understood or recognized in action and therefore not adjusted. In this manner, they can become blind spots that can manipulate emotion and reason. Jonathan Swift identifies the paradigm well in that:

“Reasoning will never make a man correct an ill opinion, which by reasoning he never acquired.”

There are two cognitive blindnesses that I’d like to highlight for their pernicious impact on our conversations. Both are the manifestation of multiple other biases and therefore important to address. The first is the Dunning-Kruger Effect, and the second is Cognitive Dissonance.

The Dunning-Kruger Effect

I want to start off on this one by clarifying that many people misinterpret the Dunning-Kruger Effect as being a lack of intelligence, but this is incorrect. It is more about low ability, expertise, or experience regarding a certain type of task or area of knowledge. It’s not discussing unintelligent people, but the specific overconfidence of people unskilled at a particular task. Smart people, even (and maybe especially) those considered experts, when asked to work outside of their skills, suffer as much as or more than anyone else, not from a lack of intelligence, but from a lack of experience and information.

The Dunning-Kruger Effect is where we apply biases to limited data, draw the wrong conclusions, and make the wrong decisions. However, because most of us have very limited impact writ large, these mistakes often don’t result in a re-learning cycle because there is a low cost to being wrong. This results in a lack of feedback from mistakes made and leads to a belief in your own correctness and, consequently, to increased confidence in your own decisions.

It can often lead to a feeling of superiority and a feedback loop that justifies the whole mess. We all deal with this in ourselves: when faced with a situation that is new, or that doesn’t have much data, we don’t see the complexity and are more confident that our answer is right. Conversely, those who have more data, and see the additional patterns, tend to doubt themselves and their abilities. Charles Darwin captured this as:

"Ignorance breeds confidence more often than knowledge"

and Bertrand Russell:

"It is one of the unfortunate things of our time that those who are confident are stupid, and those who have imagination or understanding are full of doubt and indecision."

Again, your level of education, or level of expertise in one area, or your position in a hierarchy, can actually have reinforcing effects on this blindness. The ignorance and stupidity stated in those quotes are often due to overconfidence in your position and the loss of curiosity about new information.

Nassim Taleb captures this well in the book Skin in the Game, where he describes the hubris and confidence that so many highly educated people have in “the intelligentsia,” largely due to their never suffering any of the consequences of the policies they support. Thomas Sowell also discusses this quandary in Basic Economics, where he demonstrates that the policies implemented to alleviate poverty often have a negative effect on the poor yet have no effect on the policymakers. They remain blissfully blind and overconfident as there was no cost to them being wrong.

Luckily there is a pretty simple solution for this that we explored in Systems Thinking where we approach the problem with an insatiable curiosity and add in a healthy dose of humility by accepting that we don’t know as much about the overall system as we’d like to think. With that curiosity, we can intentionally reframe the problem to see if it shifts with a change of perspective, indicating there is more to know. In this way, we can address the lack of data, not with confidence in what we think we know, but with curiosity to see if we actually know enough.

Cognitive Dissonance

Cognitive dissonance is the mental discomfort, or psychological stress, experienced by a person who simultaneously holds two or more contradictory beliefs, ideas, or values. This discomfort is triggered by a situation in which a belief we hold clashes with new evidence that contradicts our personal beliefs, ideals, and values. Our brain is insanely efficient at resolving the contradiction in order to reduce discomfort.

It's like two magnets repelling each other with the new facts as one magnet, and your perceived reality as the other. This typically results in us appearing to literally 'glitch' in the conversation just like the magnets spin away from each other. It can be seen as ignoring the point, changing the topic (whataboutism) or quickly devolving into ad-hominem attacks on the person presenting the information. Our confirmation and negativity biases are primed to protect our cognitive reality and our brain creates defensive mechanisms that are predisposed to reject the actual truth.

The first step in addressing this blindness is to recognize the feeling of cognitive dissonance. I notice it in a tightening in my solar plexus, ‘my gut.’ It’s a righteous indignation, a feeling of panic, a desire to react. I find myself racing to the keyboard to search for topics to confirm my bias. I feel the desire to attack, or retreat. I feel vulnerable. Bottom line, it’s uncomfortable. My brain is trying to smooth over the situation and I’m ‘glitching.’ Those magnets just don’t want to touch.

Once we recognize it in ourselves, we can step back and pause. This also means stepping back and pausing when you notice it is happening with someone else. We all want to be right, but you’ll rarely win against the brain’s dissonance protocols. In ourselves, it helps if you have a foundation that prepares you to be ready to question and challenge your own positions. This doesn't mean you have to give up on your position, and it doesn’t mean you can’t hold contradictory positions. I hold contradictory positions all the time because I don't find validity in the other options, and I don’t yet have enough information to justify rejecting one or the other. They also work, by and large. The key is to understand that you are holding them, and why.

Summary

I find myself dealing with cognitive blindness on a regular basis, mostly because I’m looking for it in myself. Often, we see it in others and criticize their blindness, but rarely look into ourselves and start there. If anything, that’s the one thing I hope you take from this essay: the introspection to see where you have blind spots, and how to approach resolving them.

There is no easy solution to dealing with cognitive blindness in others. You can only work on it in yourself, but once you learn that, you can then have grace for others’ reactions. Dealing with another person whose reality is being challenged and is blinded cognitively requires demonstrating, with empathy, the counterpoints, avoiding personal attacks, and as Kenny Rogers would say:

“You got to know when to hold 'em

Know when to fold 'em

Know when to walk away

And know when to run”

Systems thinking is a great start to ensure you are viewing things from multiple perspectives and our favorite core of Polymathic Being is the challenge to Learn, Unlearn, and Relearn so we don’t become ossified into a static reality. By actively acknowledging and searching for our cognitive blindness, and then adapting our models to be better, we can continually update our brain’s interpretive frameworks. This helps us cope with stress, overcome obstacles, achieve goals, and improve our happiness overall.


Not only must we consider what we "know", but what we "observe". I'm pasting the first few paragraphs of Philip Morrison's 1982 essay from his book "Powers of Ten". It's not online, but I transcribed these thoughts here for us to continue to chew on. It's wonderful reading, thinking about the six-orders-of-magnitude world which we observe. And, of course, what lies beyond.

“Looking at the world: An Essay” Philip Morrison, 1982

Of all our senses it is vision that most informs the mind. We are versatile diurnal primates with a big visual cortex; we use sunlit color in constant examination of the bright world, though we also can watch by night. Our nocturnal primate cousins mostly remain high in the trees of the forest, patiently hunting insects in the darkness.

It is no great wonder that the instruments of science also favor vision; but they extend it far into new domains of scale, of intensity, and of color. Inaudible and invisible man-made signals now fill every ordinary living room, easily revealed in all their artifice to ear and eye by that not-so-simple instrument, the radio, and the even more complicated TV set. It is very much this path of novelty that science has followed into sensory domains beyond any direct biological perception. There, complex instruments assemble partial images of the three-dimensional space in which we dwell, images rich and detailed although at scales outside the physical limits of visible light.

The images finely perceived by eye and brain in a sense span the scientific knowledge of our times (though it is risky to neglect the hand). The world is displayed by our science in diverse ways, by manifold instruments and by elaborate theories that no single person can claim any longer to master in all detail. The presentation of the whole world we know as though it were a real scene before the eyes remains an attractive goal. It should be evident that no such assemblage could be complete, no picture could be final, nor could any image plumb the depths of what we have come to surmise or to understand. Behind every representation stands much more than can be imaged, including concepts of a subtle and often perplexing kind. Yet it is probably true (truer than the specialists might be willing to admit) that the linked conceptual structures of science are not more central to an overall understanding than the visual models we can prepare.

The Gamut of the Sciences

The world at arm’s length – roughly one meter in scale – is the world of most artifacts and of the most familiar of living forms. No single building crosses the kilometer scale; no massive architecture, from pyramid to Pentagon, is so large. A similar limit applies to living forms: The giant trees hardly reach a hundred meters in height, and no animals are or have ever been found that large. The smallest artifacts we can use and directly appreciate – the elegant letters in some fine manuscript, or the polished eye of a fine needle – perhaps go down to a few tenths of a millimeter. Six orders of magnitude cover the domain of familiarity. Science conducted at these scales is rather implicit: The most salient disciplines are those that address the roots of human behavior.

I love the clarification of the Dunning-Kruger Effect. I remember watching someone clearly suffering from it, yet ridiculing less educated people who pushed back on his wrong ideas as the ones actually suffering because HE was a Doctor. More often than not, the experts do suffer from it worse than others, especially outside their fields. Sam Harris is a great example that I can think of. He's trying to be the expert on everything but he has no curiosity outside of his own myopia.