Welcome to Polymathic Being, a place to explore counterintuitive insights across multiple domains. These essays take common topics and investigate them from different perspectives and disciplines to come up with unique insights and solutions.

Today's topic looks at Artificial Intelligence (AI) from a systems perspective and strives to understand the layers, the relationships, and the implications of this incredibly broad topic. Doing so helps us understand where we are and better identify the risks of moving forward with, or stepping back from, the current rate of development.

Introduction

Artificial Intelligence (AI) is constantly in the news and on social media. But what is it really? Simply put, AI is the development of machines with the ability to think and act like humans, using algorithms and data to solve problems that would otherwise require human intelligence. It has been used in a wide range of industries, from healthcare to finance, to improve efficiency and accuracy. To hear some tell it, AI will either cause a jobocalypse that destroys work as we know it or foster the next transformational leap in human advancement. But missing between these two polemics is an understanding of what AI actually is, the layers of complexity that underpin it, and just how embedded it already is in much of our lives.

What is AI?

Artificial Intelligence (AI) refers to the ability of machines to perform tasks that would normally require human intelligence, such as understanding natural language, recognizing objects in images, and making decisions. It is a broad field of study that encompasses many different techniques and technologies, including machine learning, natural language processing, computer vision, and decision-making.

The ‘Father’ of AI, Alan Turing, set the stage for much of how we conceptualize AI. He suggested that machines were naturally limited to purely mathematical, i.e., algorithmic, computations and were therefore restricted by Gödel’s Incompleteness Theorems.

Gödel was an Austrian logician who, in the early twentieth century, proved that any consistent formal system strong enough to express basic arithmetic will always contain statements that are true but cannot be proven within that system. Formal proof can be exceptionally demanding even for statements that are provable: one attempt to prove the classic one plus one equals two took over three hundred and sixty pages, and it was still questioned.

The second theorem Gödel derived was that such a formal system, if consistent, cannot prove its own consistency. This might not seem to matter much outside of mathematics, but Alan Turing believed that while machines were bound by Gödel’s Incompleteness Theorems, humans were not.

This was the foundation for his ‘Turing Test,’ a litmus test that has been used for decades to measure Artificial Intelligence: a machine passes if it can convincingly imitate human responses in a conversation.

What this led to was a bifurcation into two main categories: weak AI and strong AI. Weak AI refers to systems designed to perform specific tasks, such as image recognition or speech recognition. These systems are not truly intelligent and can only complete the tasks they were specifically designed to do. Strong AI, on the other hand, refers to systems capable of general intelligence, meaning they can perform any intellectual task that a human can (i.e., they could pass the Turing Test).

Stepping back for context

AI is currently being used in a wide range of applications, including self-driving cars, virtual assistants, personalization, fraud detection, and medical diagnosis. We already appreciate the benefits of these systems. The challenge is to recognize the overall goal of what we call AI in these systems. When we step back, what emerges is that AI is actually an enabler of a larger technology suite we refer to as Autonomy.

As I captured in the Defense Systems Information Analysis Center (DSIAC) journal paper, Systems Engineering of Autonomy: Frameworks for MUM-T Architecture, autonomy itself is “a gradient capability enabling the separation of human involvement from systems performance.”

What has continually frustrated me is the intentional separation of AI from Autonomy, when AI is merely an enabler for achieving autonomy at varying levels of complexity. You can have a very unintelligent AI allowing full autonomy, like a Roomba robotic vacuum, or a very intelligent AI allowing complex traffic navigation, such as Cruise's autonomous taxis.
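To make the low end of that spectrum concrete, here is a minimal sketch of a bump-and-turn controller, loosely in the spirit of a simple robot vacuum. The room layout, the `BumpAndTurnRobot` class, and its rules are hypothetical illustrations, not how any actual Roomba is programmed. The robot has no map and learns nothing: it drives forward until its "bumper" hits something, then picks a random new heading. Yet it runs with no human involvement at all, which is the point about autonomy not requiring much intelligence.

```python
import random

# Hypothetical room: '#' is a wall or obstacle, '.' is open floor.
ROOM = [
    "#######",
    "#.....#",
    "#..#..#",
    "#.....#",
    "#######",
]

# Headings as (row, col) steps: north, east, south, west.
HEADINGS = [(-1, 0), (0, 1), (1, 0), (0, -1)]


class BumpAndTurnRobot:
    """Hypothetical bump-and-turn controller: no map, no memory of the room, no learning."""

    def __init__(self, row, col, seed=0):
        self.row, self.col = row, col
        self.heading = 1  # start facing east
        self.rng = random.Random(seed)  # seeded so runs are repeatable
        self.cleaned = {(row, col)}

    def step(self, room):
        dr, dc = HEADINGS[self.heading]
        nr, nc = self.row + dr, self.col + dc
        if room[nr][nc] == "#":
            # "Bumper" triggered: pick a new random heading and stay put this step.
            self.heading = self.rng.randrange(4)
        else:
            self.row, self.col = nr, nc
            self.cleaned.add((nr, nc))


robot = BumpAndTurnRobot(1, 1)
for _ in range(500):
    robot.step(ROOM)

open_cells = sum(row.count(".") for row in ROOM)
print(f"cleaned {len(robot.cleaned)} of {open_cells} open cells")
```

The set of cleaned cells only records where the robot happened to go; nothing in the controller uses it to decide anything.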

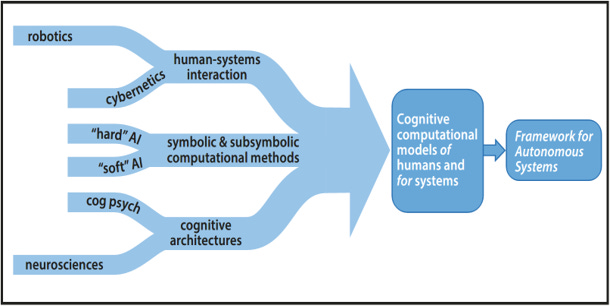

What’s important to note, and completely in the bailiwick of the Polymathic Mindset, is that autonomy supported by AI needs to take into account robotics, cybernetics, cognitive psychology, and neuroscience, as well as hard and soft AI.¹ Any conversation about AI that doesn’t consider this larger ecosystem has failed to contextualize how all these elements work together.

Focusing back on AI:

One of the larger challenges for AI is that it falls victim to an academic pitfall I’ve called the “No True Autonomy Fallacy,” which is based on the No True Scotsman Fallacy: every time we achieve a degree of AI, we immediately dismiss it as not ‘true AI.’ I capture this fallacy as follows:

What we call AI is merely unexplainable code.

So what do we call explainable code? It’s just software!

This captures the crux of the problem. We claimed we’d have True AI when a computer could beat a human at chess. But when that occurred with Deep Blue and we saw the code, it was explainable and therefore not true AI. Then we moved the goalposts and said that if a computer could beat a human at Go, then we’d have True AI. When that occurred with AlphaGo and we saw the code, we moved the goalposts yet again.

So what does this No True Autonomy Fallacy have to do with AI today? I brought it up to demonstrate that we actually have layers of AI embedded throughout our lives that we accept, use, and benefit from, all while thinking AI is still somewhere in the future.

Now that we have AI in better context, we can understand how we use it to achieve the larger outcomes we are looking for in our technologies, organizations, and culture.

Types of AI

As mentioned earlier, AI breaks into two distinct categories: Weak AI and Strong AI. Weak AI, also known as narrow AI, focuses on performing specific tasks, such as answering questions based on user input or playing chess. It can perform one type of task very well but is difficult to apply to new tasks or new datasets. Strong AI would perform a variety of functions, eventually teaching itself to solve new problems. It is what we most commonly picture when discussing the topic, yet it remains largely theoretical.

Weak AI

Automation Algorithms: While purists wouldn’t typically call this AI, we have many algorithmic conveniences at our disposal that, to the untrained eye, appear intelligent. In high school, I wrote a tic-tac-toe game with a brute-force, if/then system that could beat or stalemate any human. It long ago fell victim to the No True Autonomy Fallacy and is rarely considered AI.
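For readers curious what a brute-force tic-tac-toe player can look like, here is a minimal sketch. It uses exhaustive minimax search over the whole game tree rather than hand-written if/then rules like the ones described above, so treat it as a swapped-in technique with the same spirit: enumerate every possibility and never lose. The board encoding (a 9-character string) and the function names are illustrative choices.

```python
from functools import lru_cache

# The eight winning lines on a 3x3 board indexed 0..8, row by row.
LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8),
         (0, 3, 6), (1, 4, 7), (2, 5, 8),
         (0, 4, 8), (2, 4, 6)]


def winner(board):
    """Return 'X' or 'O' if someone has three in a row, else None."""
    for a, b, c in LINES:
        if board[a] != " " and board[a] == board[b] == board[c]:
            return board[a]
    return None


@lru_cache(maxsize=None)
def minimax(board, player):
    """Score a position for X under perfect play: +1 X wins, -1 O wins, 0 draw."""
    w = winner(board)
    if w is not None:
        return 1 if w == "X" else -1
    if " " not in board:
        return 0  # board full, no winner: draw
    nxt = "O" if player == "X" else "X"
    scores = [minimax(board[:i] + player + board[i + 1:], nxt)
              for i, cell in enumerate(board) if cell == " "]
    return max(scores) if player == "X" else min(scores)


def best_move(board, player):
    """Brute-force every legal move and pick the one with the best minimax score."""
    nxt = "O" if player == "X" else "X"
    moves = [i for i, cell in enumerate(board) if cell == " "]
    pick = max if player == "X" else min
    return pick(moves, key=lambda i: minimax(board[:i] + player + board[i + 1:], nxt))


# With perfect play from both sides, tic-tac-toe is a draw.
print(minimax(" " * 9, "X"))  # 0
```

Because the player only ever looks at the board in front of it, it is also a tiny example of the "reactive" category that follows.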

Reactive Machines: These AI systems do not have memory and can only respond to the current input. Examples include Deep Blue, the chess-playing computer that defeated Garry Kasparov in 1997. Strengths: Simple, efficient. Weaknesses: No context, no memory.

Limited Memory: These AI systems have limited memory that they can use to store information about past inputs. Examples include self-driving cars that use recent sensor data to understand their current environment. Strengths: Can learn from past experiences and improve over time. Weaknesses: Limited memory capacity; can't generalize beyond the situations they have experienced.
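The difference between these two categories can be sketched in a few lines. Both hypothetical agents below watch a stream of distance readings to an object ahead. The reactive one sees only the current reading; the limited-memory one keeps a short window of recent readings (a `collections.deque` with a fixed `maxlen`) so it can estimate whether the object is closing in. The readings, thresholds, and window size are illustrative assumptions, not values from any real vehicle.

```python
from collections import deque


def reactive_agent(distance):
    """Reactive: decides from the current reading only, with no memory."""
    return "brake" if distance < 10.0 else "cruise"


class LimitedMemoryAgent:
    """Limited memory: keeps the last few readings to estimate closing speed."""

    def __init__(self, window=3):
        self.history = deque(maxlen=window)  # oldest readings drop off automatically

    def act(self, distance):
        self.history.append(distance)
        if distance < 10.0:
            return "brake"
        if len(self.history) >= 2:
            # Average change per reading across the window; negative means closing in.
            closing = (self.history[-1] - self.history[0]) / (len(self.history) - 1)
            if closing < -5.0:
                return "brake"
        return "cruise"


readings = [40.0, 32.0, 24.0, 16.0]
memory_agent = LimitedMemoryAgent()
print([reactive_agent(d) for d in readings])    # ['cruise', 'cruise', 'cruise', 'cruise']
print([memory_agent.act(d) for d in readings])  # ['cruise', 'brake', 'brake', 'brake']
```

The memory is what lets the second agent react to a trend, and its fixed window is also the "limited memory capacity" weakness: anything older than three readings is simply gone.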

Strong AI

Theory of Mind: These AI systems are capable of understanding mental states and can simulate the behavior of other agents. They are still in the research stage. Strengths: Can understand human behavior, can empathize. Weaknesses: Requires a lot of data, difficult to implement.

Self-Aware: These AI systems would have a sense of self and consciousness. They are still in the theoretical stage and may never be possible to create. Strengths: Could understand their own behavior and make decisions based on that understanding. Weaknesses: Purely theoretical; not yet possible to implement.

General AI: These AI systems are capable of learning any intellectual task that a human can. They do not exist yet, but some researchers believe that they will be possible in the future. Strengths: Can learn any task, and can generalize from past experiences. Weaknesses: Not yet possible to implement, would likely require vast amounts of data and computational power.

Where we apply AI is also a question of what level of complexity an algorithm needs in order to take over certain human functions. The vast majority of the AI we are exposed to is still considered Weak AI. Even ChatGPT, which has been making the news constantly since the end of 2022, is considered a weak AI. It is a highly specialized model, trained on a large dataset of text, that generates natural language responses to user input. It can perform specific tasks such as language translation or answering questions. It can even pass the Turing Test in many casual conversations, but it does not possess general intelligence or the ability to understand or learn any intellectual task that a human being can. It doesn’t have a Theory of Mind, and it can only operate within the limits of its programming.

Currently, there is work on maturing Generative Pre-trained Transformers (GPTs) with stronger computational foundations, but the difficulty of continued maturation grows exponentially, not linearly. General AI is still a topic of active research, and it's not yet clear if or when we'll be able to achieve it.
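GPTs generate text one token at a time, each time predicting a likely next token from what came before. The sketch below illustrates only that core loop, using a deliberately crude substitute: a word-level bigram model that counts which word follows which in a tiny training text and then greedily appends the most frequent successor. Real GPTs use transformer networks trained on vastly more data; the corpus and the function names here are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def generate(follows, start, length=6):
    """Greedily extend `start` with the most frequent next word, one word at a time."""
    out = [start]
    for _ in range(length - 1):
        options = follows.get(out[-1])
        if not options:
            break  # never saw a successor for this word during training
        out.append(options.most_common(1)[0][0])
    return " ".join(out)


corpus = "the cat sat on the mat . the cat sat on the rug ."
model = train_bigrams(corpus)
print(generate(model, "the"))  # the cat sat on the cat
```

The output is fluent-looking but loops back on itself almost immediately, which is a small-scale reminder of how much the real models add on top of "predict the next word," and also of how far that still is from understanding.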

But what about Machine Learning?

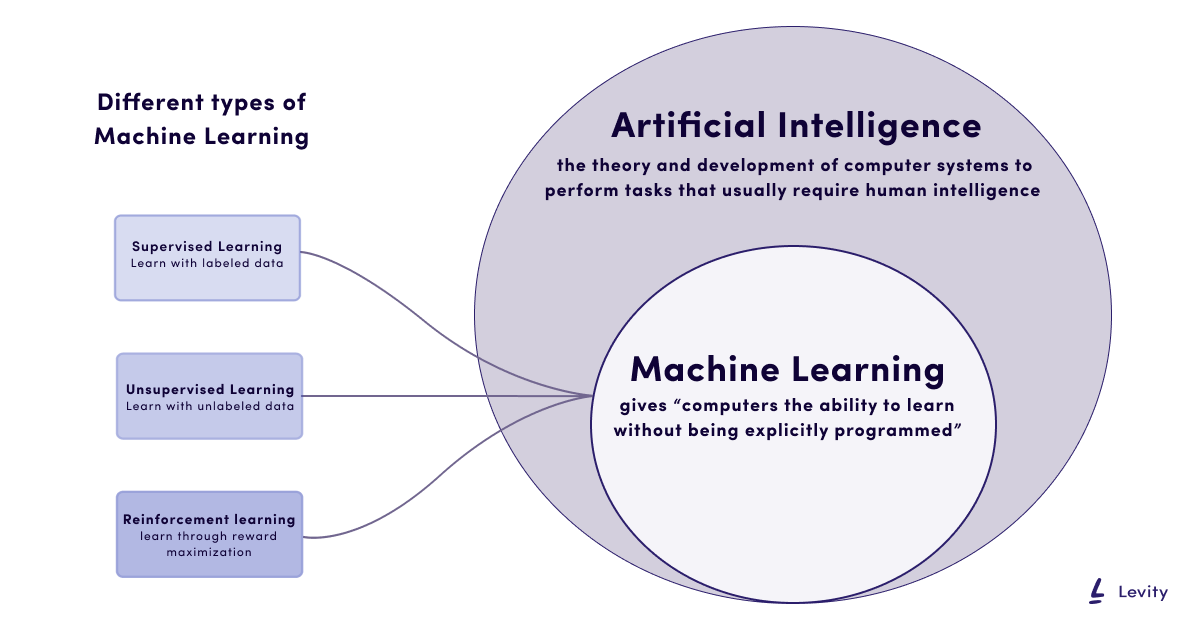

As we’ve seen, deconflicting all these terms into their proper relational structure is a bit of a mind twist, and Machine Learning is the last element I want to tackle in this essay. To do this, we should clarify the main difference between AI and ML. AI refers to the broader concept of machines being able to carry out tasks that would normally require human intelligence. ML, on the other hand, is a specific type of AI that involves training a computer system using data so that it can learn to perform a specific task without explicit instructions. In other words, ML is a method for achieving AI.
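A small contrast may help show what "without explicit instructions" means. The first function below encodes a rule by hand; the second arrives at an equivalent rule purely from labeled examples, using the classic perceptron learning rule. The task (a logical AND of two yes/no signals) and the epoch count are hypothetical choices made only to keep the example tiny.

```python
def explicit_rule(x1, x2):
    """Explicit instructions: a programmer wrote this rule by hand."""
    return 1 if x1 == 1 and x2 == 1 else 0


def train_perceptron(examples, epochs=20):
    """Learn two weights and a bias from (features, label) pairs with the perceptron rule."""
    w1 = w2 = b = 0
    for _ in range(epochs):
        for (x1, x2), label in examples:
            pred = 1 if w1 * x1 + w2 * x2 + b > 0 else 0
            err = label - pred  # 0 when correct, +1 or -1 when wrong
            w1 += err * x1
            w2 += err * x2
            b += err
    return w1, w2, b


# Labeled examples only: nobody tells the learner what the rule is.
data = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
w1, w2, b = train_perceptron(data)


def learned_rule(x1, x2):
    """The rule the perceptron found, expressed through its learned weights."""
    return 1 if w1 * x1 + w2 * x2 + b > 0 else 0


for (x1, x2), _ in data:
    print((x1, x2), explicit_rule(x1, x2), learned_rule(x1, x2))
```

Both functions give the same answers, but only the first was written by a person; the second is a handful of numbers tuned from data, which is the essence of ML as a method for achieving AI.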

In context.

Autonomy is a gradient capability enabling the separation of human involvement from systems performance.

AI is the ability of machines to perform tasks that would normally require human intelligence.

Machine Learning (ML) is a specific type of AI that involves training a computer system using data so that it can learn to perform a specific task without explicit instructions.

Another way to think about it is that AI is the broad science of making computers intelligent, while ML is a specific approach to achieving AI by training a computer system on data. Machine Learning is also often combined into systems with two other primary subcomponents: Natural Language Processing and Computer Vision.²

Natural Language Processing (NLP) is the field of AI that deals with the interaction between computers and humans using natural language and helps computers understand, interpret, and generate human language in a way that allows for effective communication.

Computer Vision (CV) enables computers to understand and interpret visual information from the world, such as images and videos. CV techniques include object detection, image classification, and face recognition.

Machine Learning, Natural Language Processing, and Computer Vision often work together in AI systems to build more advanced and capable applications. For example, in an image captioning system, Computer Vision is used to identify objects in an image, and Natural Language Processing is used to generate a sentence describing the image, using Machine Learning to train a model to associate image features with language.
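The division of labor in that captioning example can be sketched as a two-stage pipeline. This is a toy under heavy assumptions: the "image" is a tiny grid of color codes, `detect_objects` stands in for a trained computer vision model by counting colors through a hypothetical color-to-object table, and `compose_caption` stands in for a language model with a fixed template. In a real system, both stages would be models learned from data, which is where Machine Learning comes in.

```python
from collections import Counter

# Hypothetical stand-in for what a trained vision model has learned:
# which pixel colors indicate which objects.
COLOR_TO_OBJECT = {"G": "grass", "B": "sky", "Y": "sun"}


def detect_objects(image, min_pixels=2):
    """Toy 'computer vision' stage: report known objects covering enough pixels, largest first."""
    counts = Counter(pixel for row in image for pixel in row)
    return [COLOR_TO_OBJECT[color] for color, n in counts.most_common()
            if color in COLOR_TO_OBJECT and n >= min_pixels]


def compose_caption(objects):
    """Toy 'language' stage: turn detected labels into a sentence with a template."""
    if not objects:
        return "An image with nothing recognizable."
    if len(objects) == 1:
        return f"A picture of {objects[0]}."
    return f"A picture of {', '.join(objects[:-1])} and {objects[-1]}."


image = [
    "BBBY",
    "BBYY",
    "GGGG",
]
print(compose_caption(detect_objects(image)))  # A picture of sky, grass and sun.
```

The useful part is the interface: the vision stage hands the language stage a structured list of labels, so either side could be swapped for a far more capable model without changing the other.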

Summary

While our heads might be spinning at this point, I’ll try to clarify what we’ve just covered.

Firstly, AI is a tool to help systems become less dependent on human involvement, i.e., more autonomous.

Secondly, AI can range from something as simple as an expert mechanical system or basic software such as spell check, up to more advanced systems like AlphaGo and the new ChatGPT and DALL-E 2 systems released by OpenAI.
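Spell check is a good example of how unassuming this kind of AI can be. One classic approach, sketched below, suggests the dictionary word with the smallest Levenshtein edit distance (the minimum number of single-character insertions, deletions, or substitutions) from what was typed. The tiny word list is hypothetical, and real spell checkers typically layer word frequencies, keyboard layouts, and context on top of something like this.

```python
def edit_distance(a, b):
    """Levenshtein distance via dynamic programming, keeping one row at a time."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,               # delete ca
                curr[j - 1] + 1,           # insert cb
                prev[j - 1] + (ca != cb),  # substitute (free if the letters match)
            ))
        prev = curr
    return prev[-1]


def suggest(word, dictionary):
    """Return the dictionary word closest to `word` (ties go to the earliest entry)."""
    return min(dictionary, key=lambda candidate: edit_distance(word, candidate))


WORDS = ["autonomy", "algorithm", "machine", "learning", "vision"]
print(suggest("algoritm", WORDS))  # algorithm
print(suggest("machne", WORDS))    # machine
```

Nothing here is learned or mysterious, which is exactly why it has long since slipped under the No True Autonomy Fallacy and is now "just software."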

It bears repeating that AI is still largely weak AI, and most of what we call AI falls under the subcategory of Machine Learning (which is what ChatGPT actually is).

Fundamentally, these all break down into algorithms, that is, math equations, which are coupled with data in order to identify and determine outcomes that we are aiming to achieve. We explored some considerations in previous essays such as:

Can AI Be Creative - where we looked at the concept of creativity in humans and asked whether AI, by its nature, could achieve it.

Eliminating Bias in AI/ML - where we investigated what we even mean by bias and how there are actually three types of bias encoded in every AI algorithm.

What’s in a Brain - where we explored how AI is helping us learn about our human brains, and just how complex that really is when it comes to General Artificial Intelligence.

We are surrounded by AI from our cellphones to our adaptive cruise control in vehicles, even to the most basic algorithms in our security systems. Our online world of social media is saturated with these algorithms, and we enjoy the convenience across virtually every domain in the modern world.

In the development and application of these technologies, we should consider this context and get back to the actual Systems Engineering question. It’s not AI that you want, at least, not just for the sake of AI. You want AI as an enabler to something else that improves the life or productivity of us humans.

We also need to understand the psychology and human-machine interactions that will occur with these AIs to avoid any cascading consequences. Currently we are seeing extreme misinterpretations of what AI can or cannot do with some tech leaders advocating a pause, and others pushing full steam ahead.

My main recommendation is to fully understand what we are talking about with AI and to take pragmatic approaches to how it is implemented. I do believe “slow is smooth and smooth is fast.” Context matters. If we don’t take a full systems perspective on the topic, we risk significant harm, either by wielding a power we don’t understand and getting burned or by rejecting an amazing enabler that can advance human flourishing.

This essay became a foundational element of my debut novel, Paradox, in which the protagonist Kira and her friends puzzle through what AI really is in Chapter 3.



¹ I hesitate to muddy the waters, so I’ll clarify here. Weak and Strong AI are one view, and Hard and Soft AI are a separate view. Soft AI refers to the blend of psychology and neuroscience, and Hard AI is the algorithmic and computational structures that support it. For example, ChatGPT is still a Weak AI, and it is a Hard AI. To approach General AI, you would need to blend Hard and Soft into Strong AI. (I hope that didn’t get too confusing.)

² And yes, there are more components, but they aren’t combined in systems as often as ML, NLP, and CV. These are Robotics, Deep Learning (DL), Expert Systems, Neural Networks, Fuzzy Logic Systems, and Evolutionary Algorithms.
