The Enshittification of Everything

Chaos Creates Opportunity

Welcome to Polymathic Being, a place to explore counterintuitive insights across multiple domains. These essays take common topics and explore them from different perspectives and disciplines, to uncover unique insights and solutions.

Today's topic looks at how AI-generated content is polluting our digital ecosystems and the challenges that it creates in trying to understand the world around us. We’ll then explore techniques to reclaim our sanity in this muddied world so we can ensure a human-centric grounding in reality.

Intro

Humans are using AI to create unprecedented amounts of garbage content and flooding our digital ecosystems with it, seemingly without regard for the resulting tragedy of the commons, a phenomenon lovingly called enshittification. Examples abound, from absurd AI-generated images in a peer-reviewed science journal (picture 1, below), to Google search results containing images that are not, in fact, baby peacocks (picture 2), to the laundry list of examples that Gary Marcus captures in his article The Imminent Enshittification of the Internet (below pictures).

When AI pollutes, how do we know what to believe and who to listen to? It lowers our trust in academia, in journalism, and in our engagements on social media. So what is enshittification? It’s a lot like what it sounds like and is defined as:

Enshittification is a pattern in which online products and services decline in quality. Initially, vendors create high-quality offerings to attract users, then they degrade those offerings to better serve business customers, and finally degrade their services to users and business customers to maximize profits for shareholders.

But wait… based on that definition, we have to step back and accept a painful truth: everything was already enshittified and AI is showing us how bad it is. For this essay, let’s take a look at three specific areas: Academia, Social Media, and Online Search.

Academic Enshittification

We don’t have to go far back to find the story of Helen Pluckrose, James Lindsay, and Peter Boghossian, who wrote 20 fake papers to highlight the absurdity of the academic journal business. They leaned on popular jargon and even reworked a chapter from Mein Kampf. While you’d think this would be caught in peer review, it wasn’t, and one article even won an award.

It’s a symptom of the larger publish-or-perish challenge many academics face in staying relevant. As a result, hundreds of journals emerged to give the flood of writers some form of outlet. Long before AI, there were troubling statistics on replicability and reproducibility, as well as wholesale, if quiet, retractions of studies of the kind that Retraction Watch tracks.

Then we have to face the recent findings of plagiarism by prominent academics and, ironically, in US Vice President Kamala Harris’s book on crime. Needless to say, the academic publication world, and non-fiction publishing writ large, have had significant enshittification problems for many years now.

To put this into perspective, the academic publishing house Wiley discontinued 19 scientific journals as a result not of AI specifically, but of longer-term organizational rot over the past decades. As reported by The Register:

In December 2023 Wiley announced it would stop using the Hindawi brand, acquired in 2021, following its decision in May 2023 to shut four of its journals ‘to mitigate against systematic manipulation of the publishing process.’

AI didn’t cause these issues; they have existed for longer than we’d like to admit.

Social Media Enshittification

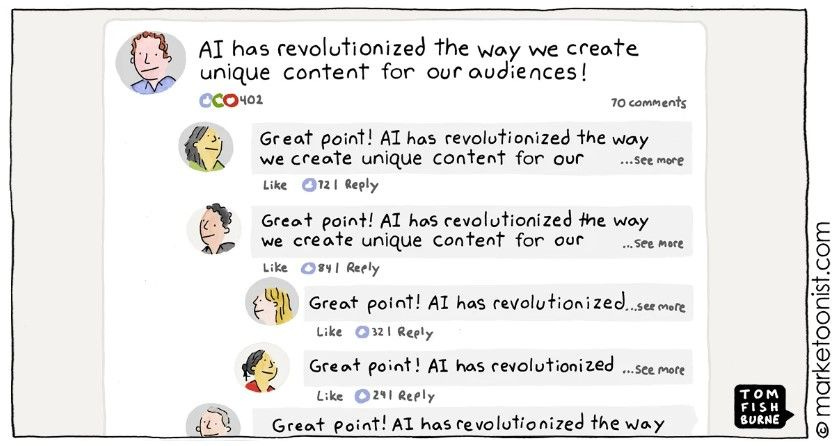

I recently came across a wonderful meme capturing an issue on LinkedIn where influencers produce AI-generated content and users reply with generic AI-generated comments. I’ve seen this firsthand on big accounts, and I’ve even had it happen on a couple of my own posts.

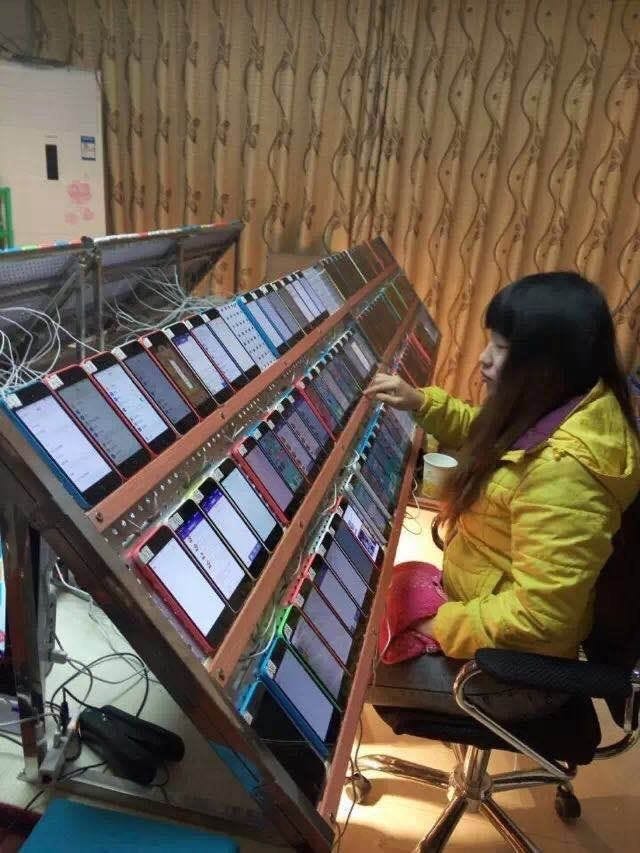

The challenge isn’t that AI is starting to cause bad behaviors. It’s that AI is being used by lazy or exploitative actors who have always been there. In truth, we’ve been dealing with Russian troll farms and other information operations muddying the water for a long time now. Add in click farms in India and elsewhere in Asia that pose as real accounts in order to monetize and sell social media engagement, and social media becomes even dirtier. Stack on influencer farms churning out human-generated rubbish and you can see AI isn’t the problem. The internet has been enshittified for quite a while.

Online Search Enshittification

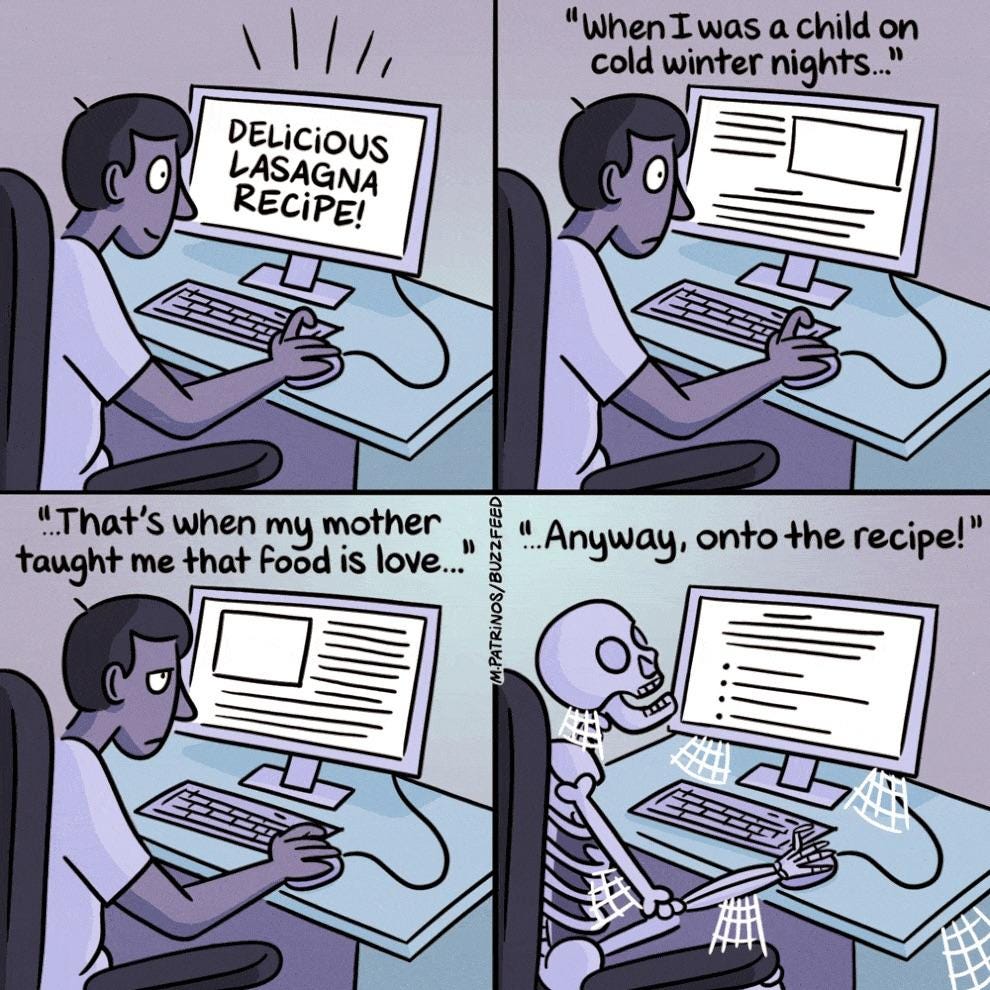

Three words: Search Engine Optimization. That’s why recipes come with the most rambling stories: you have to clog up the works with unique content to trend. It doesn’t matter that no one cares about how great-uncle Albert once mistook shiitake for magic mushrooms. It’s probably a made-up story designed purely to entice search engines with ‘original’ content. It’s such a trope that there are libraries of memes on the topic.

Online search has been plagued by hacks in a desperate attempt to bring eyeballs onto content. This isn’t new at all. We’ve dealt with pop-up ads, blatant misdirections, clickbait, fake pages, bait and switch, and more since Google first emerged. If anything, online search has gotten significantly better in the past 25 years with improvements in AI.

In fact, online search is probably the domain least degraded by AI, because AI has sorted out, and can keep sorting out, the obvious bad information that has underpinned this domain since its inception. I doubt AI can make it worse.

Cleaning Up the Problem

I started this essay with the goal of highlighting the problems with AI and ended up realizing the misuse of AI in all of these examples is merely a natural symptom of humans manipulating these systems for their own gain. If you go back to the definition of enshittification, you see that it’s a drive for profits that predated the recent explosion of AI within our digital ecosystems. AI is just exposing the man behind the curtain and may end up bringing the whole system down.

AI highlights the existing enshittification of digital spaces but may also wake people out of their stupors when they can no longer ignore the full depth of the problem. Those who have polluted our digital commons never cared about human creation anyway, so AI is a siren call to accelerate their tactics. I think this will backfire. I was never counting on them to produce good human content, and I anticipate they’ll now create content so bad that we demand better.

What could happen is that social media sites finally become serious about blocking these bot farms and algorithm juicers. New sites might emerge where content is certified and validated. Tools like blockchain or other proof of work and encryption technologies may provide confirmation of content.

I don’t think this means banning AI either. As we explored in Augmenting Intelligence, there is a valuable place for AI in our technology stack that can empower human creativity, not replace it. It provides a great opportunity to demonstrate the real value of human-created content while still using valuable technology in a nuanced and ethical way.

Counterintuitively, AI also provides new mechanisms to identify polluting materials and behaviors and to help clean up the entire mess. The digital commons have been polluted for much longer without AI than with it. AI has merely highlighted how bad the behaviors have really been while giving us a tool to help clean them up. How’s that for a brain twist?

Finally, for a little historical context on dealing with bad information, here’s a quote attributed to Edgar Allan Poe from 1845 [1]. These are good words to remember:

Believe half of what you see and nothing of what you hear.

Here are two other great resources for managing the enshittification:

Further Reading from Authors I Appreciate

I highly recommend the following Substacks for their great content and complementary explorations of topics that Polymathic Being shares.

Goatfury Writes: All-around great daily essays

Never Stop Learning: Insightful life tips and tricks

Cyborgs Writing: Highly useful insights into using AI for writing

Educating AI: Integrating AI into education

Mostly Harmless Ideas: Computer science for everyone

[1] Attributed to Poe but likely an older saying from the British Royal Navy.

We want to maximize our output, and in this case, for the worse. We also look for shortcuts, and AI provides those shortcuts since writing is difficult and time-consuming for most people. Since we have been given a tool for doing it, we have become dependent on it.

My recommendation, even before Gen AI proliferation, was to read old books (25-30+ years old, if not hundreds of years old) as much as possible. The reason is that the pressure to produce was not there in the past. Also, if a book or concept has survived for so long and is still applicable, then the Lindy effect applies to it. Most new ideas, concepts, and books have not gone through the test of time, so in most cases, wait to read them and see if they are still around 15-20 years after publication. That does not mean I do not read new ideas or books; I do, but only if I can validate the concepts in other ways. I believe in the Royal Society’s motto, 'Nullius in verba,' which means 'take nobody's word for it' unless I can validate it in another way.

I will end with one of my favorite quotes from C. S. Lewis. He observed that every age suffers from its own blindness, failing to recognize perspectives that will be obvious to succeeding generations. To overcome such blindness, he writes, “The only palliative is to keep the clean sea breeze of the centuries blowing through our minds, and this can be done only by reading old books.”

AI is a tool. Tools are amoral. I would guess from the first tool made, we have used them for good and evil. Will AI bring about our betterment? Our deception? Our destruction? At the individual, community, or species level?