Welcome to Polymathic Being, a place to explore counterintuitive insights across multiple domains. These essays take common topics and investigate them from different perspectives and disciplines to come up with unique insights and solutions.

Today's essay is another collaboration, introducing co-author C.J. Unis, a Space Force systems engineer, aspiring polymath, and complexity wizard, to discuss systems thinking. While we think we understand systems, our analysis most often misses the mark when applied in both technical and non-technical domains. This essay aims to help you apply systems thinking properly, hit the mark, and resolve the wicked problems we face.

Introduction to Systems Thinking

Systems thinking has its roots in the fields of systems theory and cybernetics, which emerged in the mid-20th century. Systems theory, developed by scientists such as Ludwig von Bertalanffy, focused on the study of complex systems and their interactions, while cybernetics, developed by Norbert Wiener and others, focused on the study of control and communication in living and artificial systems.

W. Edwards Deming was another key figure in the development of systems thinking, and his "System of Profound Knowledge" (SoPK) is still widely used today. He documented the thinking and practice that led to the transformation of the Japanese manufacturing industry in his 1986 book Out of the Crisis and advocated for an appreciation for a system as a network of interdependent components that work together to accomplish some aim.

Today, systems thinking is used in a wide range of fields, including engineering, management, and the social sciences. It is often used to analyze and improve organizational systems, social systems, and ecosystems. Systems thinking can help identify and solve problems, make better decisions, and improve overall performance by understanding the interdependencies and feedback loops that exist within a system.

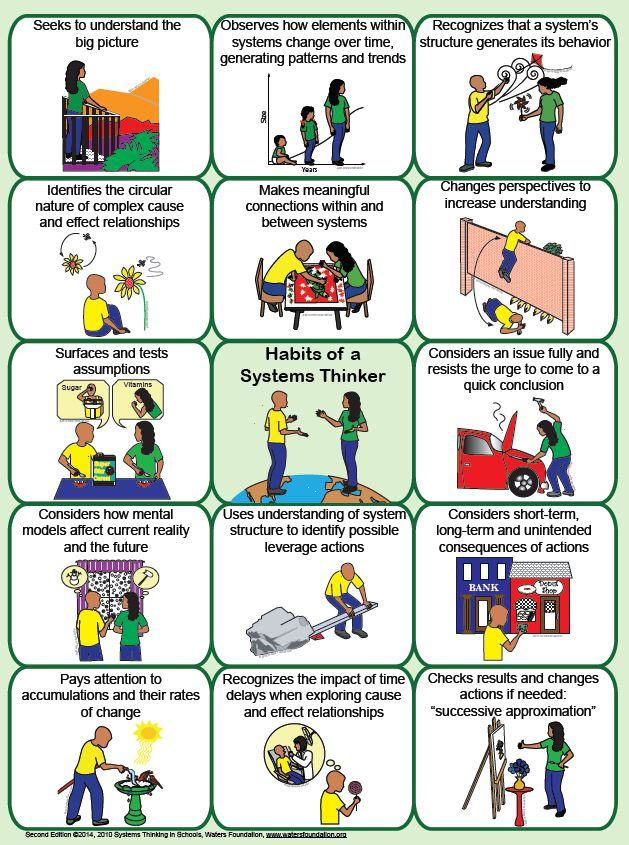

It's important to note that systems thinking is not a single method or tool but a mindset: a way of thinking that allows people to understand complex systems and how they work, and to use that understanding to make better decisions and take more effective actions.

What’s in a systems mindset?

“Whenever I run into a problem I can't solve, I always make it bigger. I can never solve it by trying to make it smaller, but if I make it big enough, I can begin to see the outlines of a solution.”

- Gen. Dwight D. Eisenhower

Systems thinking intentionally addresses ways to mature organizations so they can solve increasingly complex problems. To tackle this level of challenge, we first approach the problem with insatiable curiosity and a healthy dose of humility, accepting that we probably don’t know as much about the overall system as we’d like to think. We like Simon Sinek’s advice to “Be the idiot.” In reality, accepting that there is always more to learn about a system is the first step in true systems thinking. Couple that with curiosity, and we will also ask questions from a diversity of viewpoints to ensure we understand what’s going on.

Another powerful concept that we apply is borrowed from the science fiction book Ender’s Game:

“It was impossible to tell, looking at the perfectly square doors, which way had been up. And it didn’t matter. For now Ender had found the orientation that made sense. The enemy’s gate was down. The object of the game was to fall toward the enemy’s home.”

- Ender’s Game (Card, 1985)

For context, the Battle School in Ender’s Game has a training lab where cadets compete in war simulations in zero gravity. Because of where the lab’s entrances were, it was easy to view the battlefield as stretching across the Battle Lab. This framing led to established, and therefore predictable, tactics: teams kept competing, but the margins of victory were slight and the competitions stagnated. Ender recognized that in zero gravity, up, down, and across are arbitrary concepts rooted in terrestrial experience, which can be limiting in space. By reframing the battlefield so that the enemy’s gate was down, he transformed the very nature of how the battle was fought.

This deliberate reframing of the problem space is a perfect complement to curiosity and humility, letting you play with the problem from multiple perspectives. Curiosity, humility, and reframing are key to avoiding what we covered previously in Functional Stupidity.

A framework for systems thinking

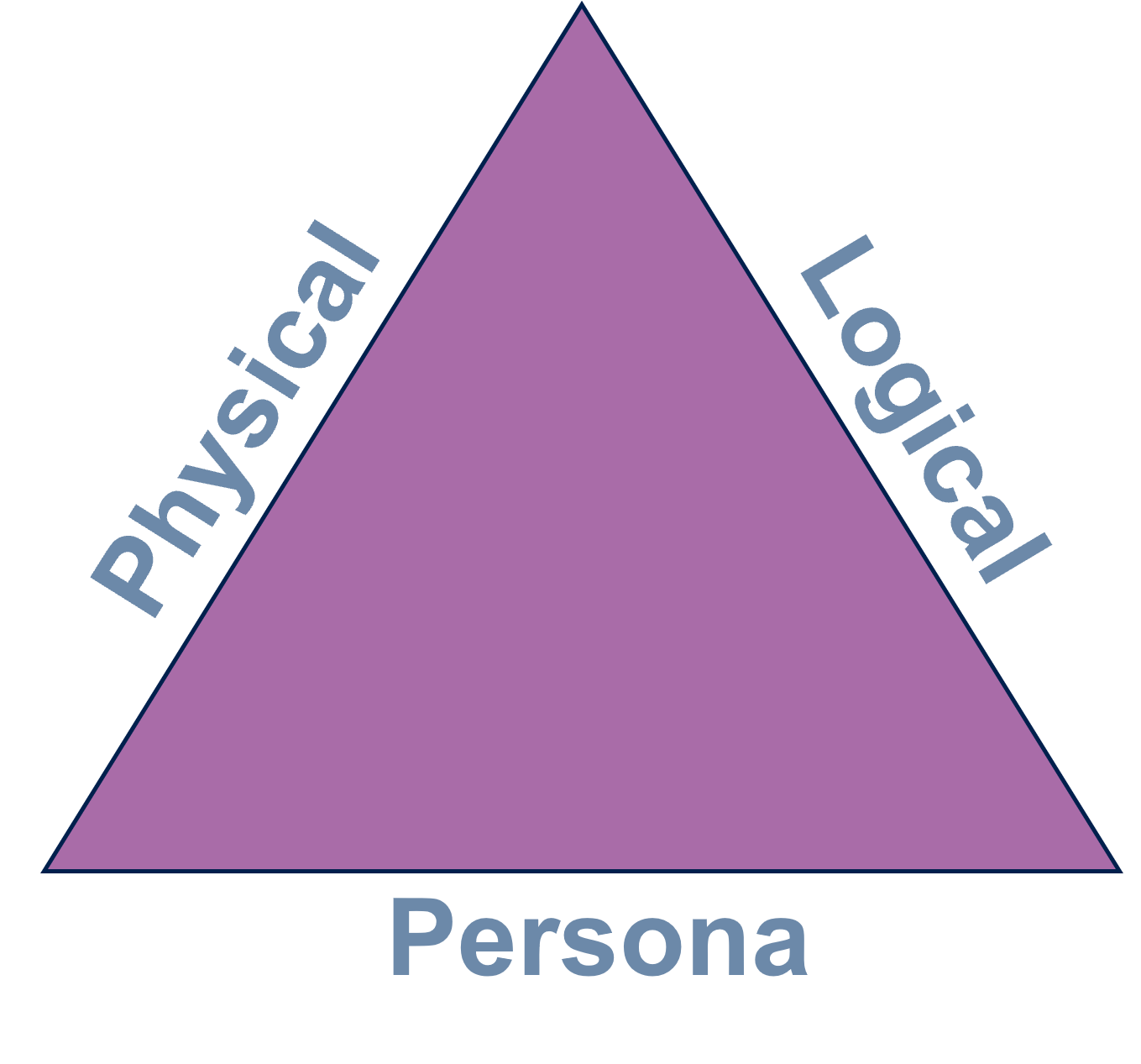

Beyond the mindset, we propose three major perspectives to consider when first approaching any problem with systems thinking. We borrow these from the field of cybersecurity, where the US Government describes cyberspace as existing in the Physical, Logical, and Persona dimensions. This works out very well for many problems businesses face, and we stumbled across these three in a previous essay, Maturing Product, Process, and People for Startup Success (also known as The 3Ps of Business). We’ll define these three layers quickly and then expand into three case studies.

Physical - These are the systems we understand best: the products of a business. They don’t have to be purely physical, but they have to be the object of your development. We understand systems thinking decently well here in classic Systems Engineering, yet it still becomes a stumbling block when integrating multiple systems through initiatives like Digital Twin, Composable Systems, or Data Architecture maturation.

Logical - This layer is the glue that holds systems together: the processes and tools we often overlook when considering physical systems. It’s where leadership must assess the whole spectrum and strike the balance between process anarchy and process paralysis. It is also at this layer that you find many of the excuses for why the product architecture isn’t designed well. This layer is subject to Conway’s Law, which holds that a system’s architecture tends to mirror the communication structure of the organization that builds it.

Persona - This is probably the most overlooked layer in all systems analysis, and yet it holds the biggest key to success, whether in Ethics, Trust, Human-Machine Symbiosis, avoiding Functional Stupidity, or understanding the Successfully Unsuccessful. A lot of time is spent talking about this layer, but when it comes to the hard conversations about behaviors, organizations normally pivot to the physical and logical in the hope that the behaviors will follow (they almost never do).

These layers are like the three sides of an equilateral triangle, or the three legs of a stool. They cannot be separated and evaluated in isolation as the true analysis is in the fusion of the three and the consideration of how each affects the others.

With this simple introduction, we’ll shift toward a more nuanced application in the following three use cases: Cyber, Autonomy, and Global Food Supply Chain Resiliency.

Case Study 1 - Cyber:

Among the biggest challenges in conducting systems analysis of cyberspace are competing definitions, ubiquitous buzzwords, and the desire to label roles and responsibilities as ‘cyber’ to boost status. Thankfully, Joint Publication 3-12, Cyberspace Operations, provides improved clarity by being the first to apply the layered approach we just introduced: “To assist in the planning and execution of [Cyber Operations], cyberspace can be described in terms of three interrelated layers: physical network, logical network, and cyber-persona. Each layer represents a different focus from which [Cyber Operations] may be planned, conducted, and assessed.” And thus was born a simple framework for the layers of cyberspace that also informs almost all other systems analysis.

This framework is essential to cybersecurity because nearly all cyber conversations focus on one layer or another and rarely on all three. This leads to the failure of most proposed solutions, because the vulnerabilities most often lie in the seams between layers, and only by viewing the whole can you see the gaps.

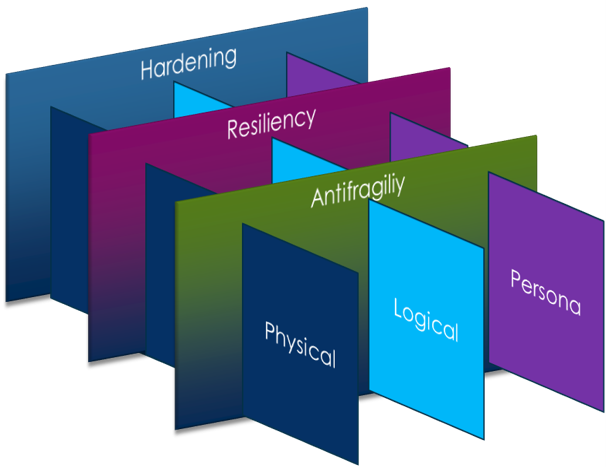

Cybersecurity, then, must also look across all three layers, and when it does, classic cybersecurity measures quickly prove inadequate. Classic hardening (firewalls, secure facilities, encryption) must be complemented by resiliency (the ability to detect, respond, and recover), which in turn opens an entirely new domain of antifragility (where systems actually invite, and are strengthened by, attacks, akin to our immune system and vaccines).

Without the consideration of all three layers of cyberspace, we don’t have a foundation to even consider the nuanced application of the layers of cybersecurity. Yet when you blend hardening, resiliency, and antifragility across the Physical, Logical, and Persona layers you open up an incredible opportunity space for layered security measures that are dynamic and adaptable.
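One way to make this layered view operational is a simple coverage matrix: tag each security measure with the layer it acts on and with whether it provides hardening, resiliency, or antifragility, then list the empty cells. This is a hypothetical sketch of our own, not a method from JP 3-12, and the layer assignments for the classic hardening examples named above (firewalls, secure facilities, encryption) are our judgment rather than an official mapping.

```python
from itertools import product

LAYERS = ("Physical", "Logical", "Persona")
POSTURES = ("Hardening", "Resiliency", "Antifragility")


def coverage_gaps(measures: dict[str, tuple[str, str]]) -> list[tuple[str, str]]:
    """Return every (layer, posture) cell that no measure covers."""
    covered = set(measures.values())
    return [cell for cell in product(LAYERS, POSTURES) if cell not in covered]


# Hypothetical tagging of the classic hardening examples from the essay
classic = {
    "firewalls": ("Logical", "Hardening"),
    "secure facilities": ("Physical", "Hardening"),
    "encryption": ("Logical", "Hardening"),
}

gaps = coverage_gaps(classic)
total = len(LAYERS) * len(POSTURES)
print(f"{total - len(gaps)} of {total} cells covered")
for layer, posture in gaps:
    print(f"  gap: {layer} / {posture}")
```

With only classic hardening tagged, 2 of the 9 cells are covered and the entire Persona row is empty, which is exactly the single-layer focus the framework is meant to expose.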

Case Study 2 - Autonomy:

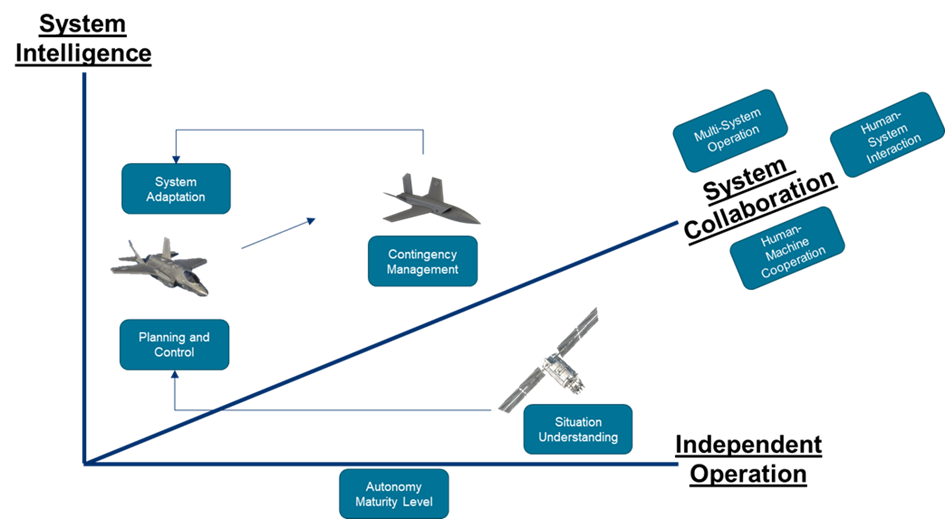

In “Autonomous Horizons – The Way Forward,” the Chief Scientist of the U.S. Air Force captured the complex relationships among the underlying functions and behaviors needed to achieve autonomous systems. The graphic, as shown above, identifies that the solution space for autonomous systems is, by nature, polymathic: it requires knowledge spanning a significant number of subjects and draws on complex bodies of knowledge to solve its problems. The Physical, Logical, and Persona layers map cleanly to this conceptualization, where Physical includes robotics and cybernetics, Logical contains hard and soft AI, and Persona holds cognitive psychology and neuroscience.

Considering the layers in this manner is essential to understanding the complexities of developing autonomous systems. These three layers also apply to the system of systems of autonomy, not just to a single system. When we branch into a concept called Manned-Unmanned Teaming (MUM-T), we start to see these layers apply across systems at scale.

In the image below, we can measure autonomy along three axes: System Intelligence (what we can call AI), System Independence (how separated from the human, or how autonomous, a system is), and System Collaboration (system-of-systems interaction, including MUM-T). With this three-dimensional framework, we can look at how, and where, to blend Physical, Logical, and Persona implications.

As an example, a highly independent yet low-intelligence sensor system can pass situational understanding to a less independent but more intelligent battle management system, which produces planning and control functions. These high-fidelity mission plans can have a human-in-the-loop to validate the output and can then be sent to a weapon system with a human-on-the-loop, balancing the level of intelligence and independence with the opportunity for contingency management. System adaptation can be achieved by closing the loop between the Physical, Logical, and Persona layers of the integrated system. All of this is tied together through system collaboration, which measures and analyzes the relationships between systems.¹

Interlude:

Whew. Let’s take a quick pause and consider what we just unlocked. Most autonomous systems fail because they address each of these concepts one by one, layer by layer. What we just did was introduce the framework of Physical, Logical, and Persona, use it to contextualize the major disciplines within the Artificial Intelligence (AI) and Autonomy communities, and pull it all back together into a holistic framework. Clearly, the systems perspective, while a little intimidating, is also incredibly illuminating and ensures we don’t oversimplify or miss essential elements.

Case Study 3 - Global Food Supply Chain Resiliency:

Last but not least is our global food supply chain. This one is highly complex because it requires managing business performance, supply and demand relations, legislation and regulations, operational technology, and certification related to company resilience and food safety. We also have to take into account operations, technology systems, production specifications, quality activities, traceability, and assessment.

COVID-19 provided a great example of the fragility of our supply chains. Green beans and squash grown for commercial buyers could not be redirected when consumers shifted away from restaurants, so entire fields in Florida were plowed under because the produce could not move from the commercial market to the consumer market. Milk, eggs, and pigs were affected as well. The major driver was the inability of the supply chain to adapt from one market to another quickly enough.

One systems-thinking insight to consider here is that simple rules can produce complex behaviors. Nature is a great example: it displays shocking complexity, yet as we dig down we can explain the microscopic, and as we work back up, the macroscopic becomes incredibly complex again.
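A compact way to see simple rules producing complex behavior is an elementary cellular automaton. The sketch below uses Wolfram’s Rule 30, an illustration we chose rather than one from the original discussion: each cell looks only at itself and its two neighbors, yet starting from a single live cell the pattern quickly turns irregular. The function names, grid width, and edge handling (cells beyond the edges count as 0) are arbitrary choices for the example.

```python
def step(cells: list[int], rule: int = 30) -> list[int]:
    """Apply one generation of an elementary cellular automaton.

    Each cell's next state depends only on itself and its two neighbors;
    cells beyond the edges are treated as 0.
    """
    n = len(cells)
    nxt = []
    for i in range(n):
        left = cells[i - 1] if i > 0 else 0
        center = cells[i]
        right = cells[i + 1] if i < n - 1 else 0
        # The 3-cell neighborhood forms a number 0-7; that bit of `rule` is the new state
        pattern = (left << 2) | (center << 1) | right
        nxt.append((rule >> pattern) & 1)
    return nxt


def run(width: int = 31, generations: int = 16, rule: int = 30) -> list[str]:
    """Start from a single live cell and return each generation as a text row."""
    cells = [0] * width
    cells[width // 2] = 1
    rows = []
    for _ in range(generations):
        rows.append("".join("#" if c else "." for c in cells))
        cells = step(cells, rule)
    return rows


if __name__ == "__main__":
    for row in run():
        print(row)
```

Changing only the rule number (for example `rule=90`) yields a very different, regular pattern, a reminder that the behavior lives in how the parts interact rather than in any single part.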

In practice, micro-solutions will not fix macro-problems, but macro-solutions grounded in a proper understanding of the underlying elements can resolve dozens of micro-issues when applied from a systems perspective. Let’s take the specific example of milk in the COVID-impacted supply chain and analyze it against our framework.

Physical - The main driver in the milk example was a lack of gallon jugs. Commercial sales were keyed toward larger volumes, often five gallons or more, while consumers buy one gallon or less. There wasn’t a shortage of milk; there was an inability to transition milk from one physical system to another.

Logical - Milk distribution was also constrained by procedures, policies, laws, and import/export regulations that mandate certain consumer vs. commercial distribution timelines. Internal logical restrictions meant bulk suppliers could not sell to end consumers, even in 5-gallon buckets, because they had no sales functions that allowed it.

Persona - As consumers shifted from eating out to eating at home, a twofold issue emerged. First, demand for consumer-sized milk went up. Second, when stores started managing the excess demand by limiting the volume per customer, the limits drove even more demand, leading to runs on stores for milk even when supply wasn’t actually that short.
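To illustrate how one layer’s constraint can strand supply even when there is no shortage, here is a toy allocation model. It is our own simplification, not a model from the source: every quantity is a hypothetical unit, not real data, and the three knobs (jug repacking capacity, a rule permitting direct bulk sales, and a hoarding multiplier) are stand-ins for the Physical, Logical, and Persona constraints described above.

```python
from dataclasses import dataclass


@dataclass
class MilkScenario:
    """Toy scenario; every number is a hypothetical unit, not real data."""
    supply: float                 # raw milk available this period
    commercial_demand: float      # bulk demand (restaurants, schools), collapsed by lockdowns
    consumer_demand: float        # baseline household demand for jug-sized milk
    repack_capacity: float        # Physical: bulk milk that can be put into consumer jugs
    direct_bulk_sales: bool       # Logical: may bulk suppliers sell straight to consumers?
    hoarding_factor: float = 1.0  # Persona: >1.0 when purchase limits trigger panic buying


def allocate(s: MilkScenario) -> dict[str, float]:
    """Route supply to commercial buyers first, then to consumers via the allowed channels."""
    to_commercial = min(s.supply, s.commercial_demand)
    leftover = s.supply - to_commercial
    demand = s.consumer_demand * s.hoarding_factor
    # Consumer channel: jug capacity, plus all leftover milk if rules permit direct bulk sales
    channel = s.repack_capacity + (leftover if s.direct_bulk_sales else 0.0)
    to_consumer = min(leftover, demand, channel)
    return {
        "dumped": leftover - to_consumer,
        "unmet_consumer_demand": demand - to_consumer,
    }


if __name__ == "__main__":
    base = MilkScenario(supply=100, commercial_demand=30, consumer_demand=60,
                        repack_capacity=40, direct_bulk_sales=False)
    print("baseline:     ", allocate(base))
    print("with hoarding:", allocate(MilkScenario(100, 30, 60, 40, False, hoarding_factor=1.25)))
    print("rules relaxed:", allocate(MilkScenario(100, 30, 60, 40, True)))
```

In the baseline, 30 units are dumped while 20 units of consumer demand go unmet at the same time: no shortage of milk, yet empty shelves. Raising the hoarding factor widens the unmet gap to 35 without changing the amount dumped, while relaxing the Logical rule cuts dumping to 10 and clears the shortfall, so a change in one layer relieves a symptom felt in another.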

The result was a complex cascading problem that exposed how fragile the system was to changes across the layers. In fact, it rises to the level of a wicked problem, because you can’t prepare for all contingencies. It is a great example of small changes in each layer leading to dramatic consequences across all layers, which resulted in needed milk being poured onto fields at a rate of 3.7 million gallons a day until the system re-stabilized.

While it isn’t feasible to prepare for ALL possible contingencies, that doesn’t mean we shouldn’t examine contingencies at all. Managing them takes us into the world of resiliency, which is the:

"Ability to plan, prepare for, mitigate, and adapt to changing conditions from hazards to enable rapid recovery of physical, social, economic, and ecological infrastructure."²

As complex as that sounds, it simplifies when we apply it across the Physical, Logical, and Persona layers. Doing so lets us address supply chain threads with an adaptable approach that stays open to discussing failure modes, and we can begin to see the outlines of a solution. This is where existing concepts like Chaos Engineering, or the ASU Applied Futures Lab’s Threatcasting, allow us to play with, envision, and quantify failure modes to build in resiliency.

Conclusion:

A quote wrongly attributed to Einstein, "If you can't explain it simply, you don't understand it well enough," leads to a well-intended but often misapplied idea: that if we simplify enough, we will understand the problem. As we discovered in this essay, micro-solutions that ignore the system don’t solve macro-problems. Furthermore, a quote that Einstein did say, in response to a request for a simple explanation of his Nobel Prize-winning physics, captures the challenge of systems thinking very well:

“If it could be summarized in a sentence, it wouldn't be worth the prize.”

To avoid over-indexing and saying that everything is hyper-complex and unknowable, we backstop this with Richard Feynman’s counterpoint to complexity. After being asked to explain why spin one-half particles obey Fermi-Dirac statistics, he boldly stated:

"I'll prepare a freshman lecture on it."

Yet a few days later, he showed the humility we discussed in the introduction and returned, saying:

"You know, I couldn't do it. I couldn't reduce it to the freshman level. That means we really don't understand it."

Systems thinking is insatiable curiosity and a heavy dose of humility (accepting that we may not know the real problem), coupled with an intentional reframing of problems. It isn’t a series of tools or a single framework, but a way to conceptualize and reconceptualize problems. One way to check whether you have a systems perspective is an adage Michael has developed over the years:

If you think you understand the problem, step back and see if you understand the system.

Our analysis may become more complex, but that complexity lets us look at the problem from the Physical, Logical, and Persona perspectives and establish context, which in turn lets us simplify it into practical solutions.

The practice of systems thinking can be learned and improved by exercising analysis against a framework that ensures you are looking at the perspectives that drive the most value. The framework also helps you avoid getting lost in an infinite number of perspectives. Systems thinking also means determining when more information or knowledge is needed, and when it isn’t.

By applying the framework of Physical, Logical, and Persona, and coupling it with the intentional reframing of problems underpinned by curiosity and humility, you can be more confident that you have the outline of the problem and can start applying solutions that align outcomes with your aspirations and goals.

For a more distilled version written after this essay, please refer to Yes, And… So!

Carl “C.J.” Unis is currently a systems engineer with the United States Space Force. He is a subject-matter expert in COOP (Continuity of Operations), COG (Continuity of Government), devolution, infrastructure, community resiliency, alternative energy systems, and supply chain logistics. He also spent over 10 years at Sandia National Laboratories as a systems engineer performing complex systems analysis for the Department of Energy, Department of Defense, and other government agencies.

Mr. Unis has a master’s degree in systems engineering focused on systems complexity and cascading failures of enterprise systems from the Stevens Institute of Technology and is a U.S. Marine Corps veteran.

C.J. and Michael met at the Military Operations Research Society 2022 Annual Symposium, where they spent a great deal of time talking about polymathic and systems thinking across a broad spectrum of disciplines, a conversation that has continued unabated.


¹ Woudenberg, M., Waltensperger, M., Shideler, T., & Franke, J. (2020). Systems Engineering of Autonomy: Frameworks for MUM-T Architecture. Defense Systems Information Analysis Center, Volume 7, Number 3, 29-41.

² Civil Engineering, Jan/Feb 2022, "Responding to Code Red," pp. 36-45. The quote, on page 41, is from ASCE PS 518, Unified Definitions for Critical Infrastructure Resilience.
