Don't Trust AI... Entrust It

A simple shift in concepts can make all the difference

Welcome to Polymathic Being, a place to explore counterintuitive insights across multiple domains. These essays take common topics and investigate them from different perspectives and disciplines to come up with unique insights and solutions.

Today’s topic started as a much more technical article that I’ve distilled down to capture one of the most important insights I’ve come across in the conversations around AI and Autonomous Systems. It’s the topic of trust, and we’ll explore why that concept is so fickle and how one simple, yet counterintuitive, change of perspective can unlock an incredible method for assessing ethics, complexity, trust, safety, verification and validation, and much more.

Back in 2020-2021, I had the pleasure of working with several outstanding thinkers at aerospace giant Lockheed Martin. Troy Shideler, Mike Harasimowicz, Joshua Deiches, and I built on the work of many others[1] and brought a first-principles approach to autonomous systems that we then matured into a practical framework we called FIDES - Frameworks for the Integrated Design of Entrusted Systems. This essay distills the original paper for improved readability. (Full draft paper here)[2]

The Challenge

Many of the issues facing AI and Autonomous Systems stem from the expectation that we must trust them in order to put them to practical use. Yet this entire conversation is predicated on a misunderstanding of autonomy, of AI, and of the delicate and incredibly important concept of trust.

Today we’ll break these topics down and show how, with a simple reframe, we can strip away the complexity of advanced technologies and ensure a focus on human advantage while avoiding common pitfalls.

Named after the Roman goddess of trust and good faith, FIDES creates a holistic approach to analyzing AI and autonomous systems that balances trust, ethics, safety, security, and more.

Baselining Autonomy

My long-time readers won’t be surprised to hear that a term as overused as Autonomy, as applied to autonomous systems and AI, has a very fractured consensus on what it actually means. Each time you hear that word, you’ll find esoteric and/or hyper-myopic definitions often bordering on the absurd (not unlike AI). As such, our team was forced to propose a simple definition of autonomy for technology solutions:

Autonomy is a gradient capability enabling the separation of human involvement from systems performance.[3]

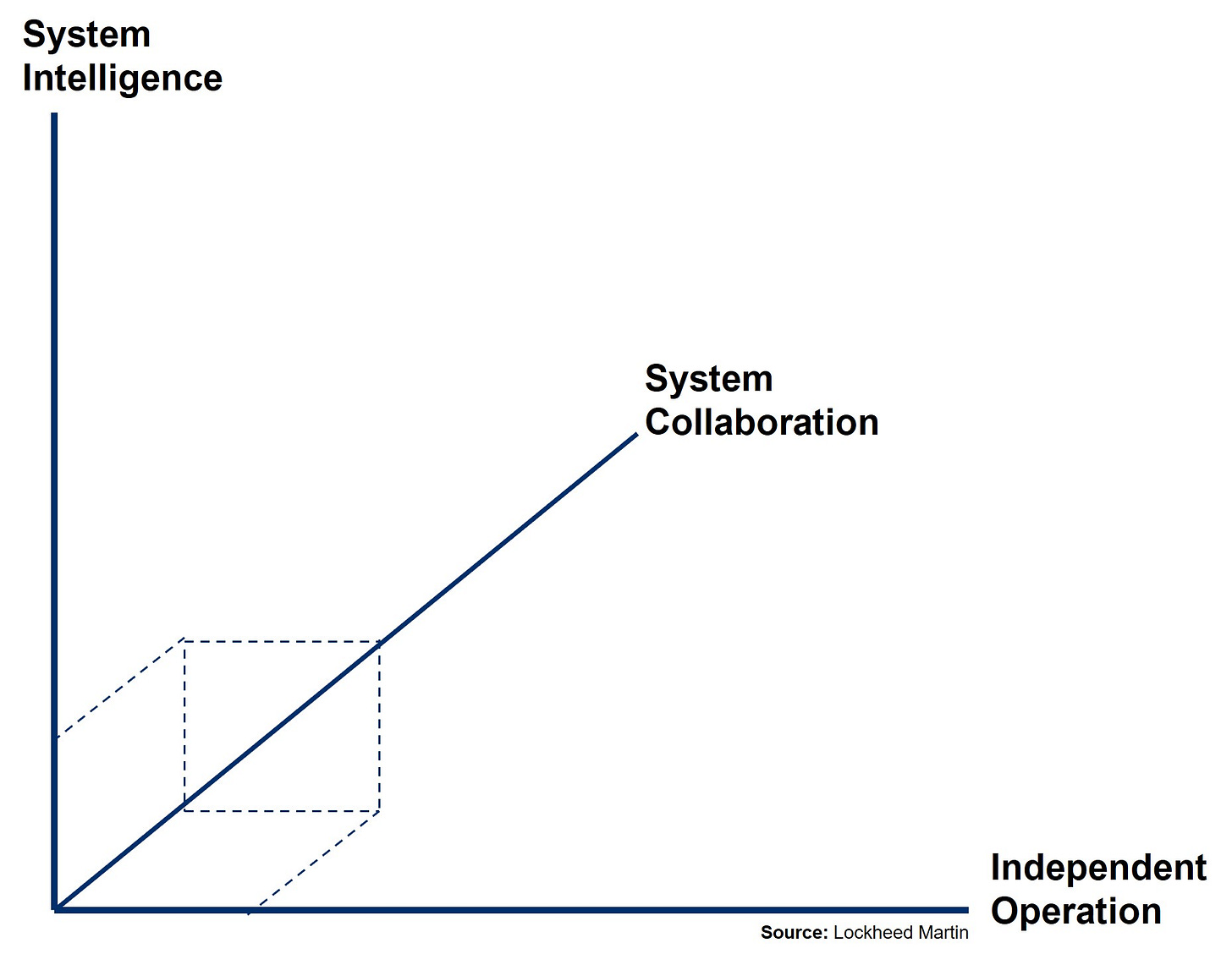

The key is that it’s a gradient measurement from zero to complete autonomy. The larger concept, as visualized in the three dimensions above, integrates AI on a similar gradient. This construct allows you to measure:

Independent Operation (Autonomy): The degree to which a system relies on human interaction, measured as human-in-, on-, or off-the-loop.

System Intelligence (AI): The level of complexity needed to compose, select, and execute decisions. The scale goes from less complex AI such as expert systems to the most advanced, nondeterministic AI.

System Collaboration: Captures the relationships between systems and humans.

This allows us to map out human and machine systems on the same chart to see how they interact. For example, an Officer in the Army has more training (let’s call that intelligence) as well as more autonomy allocated than a Private just out of Basic Training. Yet these two individuals, with different intelligence and independence, work together on a team through system collaboration.

You can likewise start mapping AI cognitive agents, cruise missiles, self-driving vehicles, and human actors together on the same dimensions. This lets you determine the actual degree of autonomy and intelligence you will allow a system, based on how much trust you’ll place in it.
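To make the three-axis chart a little more concrete, here is a minimal sketch of how humans and machines could be placed on the same dimensions. It is an illustration only, not the FIDES paper’s notation: the `Actor` class, the 0.0 to 1.0 scales, the thresholds that band the autonomy gradient into human-in/on/off-the-loop, and the scores for the Officer and Private are all hypothetical assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class Actor:
    """A hypothetical point on the three-axis chart (human or machine)."""
    name: str
    autonomy: float      # Independent Operation: 0.0 (fully human-driven) to 1.0 (complete autonomy)
    intelligence: float  # System Intelligence: 0.0 (simple rules) to 1.0 (advanced, nondeterministic AI)
    collaborators: set[str] = field(default_factory=set)  # System Collaboration links

    def __post_init__(self) -> None:
        # Both gradients are assumed to share the same 0.0 to 1.0 scale.
        for axis in ("autonomy", "intelligence"):
            value = getattr(self, axis)
            if not 0.0 <= value <= 1.0:
                raise ValueError(f"{axis} must be between 0.0 and 1.0, got {value}")

    def loop_position(self) -> str:
        """Band the autonomy gradient into human-in/on/off-the-loop (thresholds assumed)."""
        if self.autonomy < 1 / 3:
            return "human-in-the-loop"
        if self.autonomy < 2 / 3:
            return "human-on-the-loop"
        return "human-off-the-loop"


def collaborate(a: Actor, b: Actor) -> None:
    """Record a two-way System Collaboration link between two actors."""
    a.collaborators.add(b.name)
    b.collaborators.add(a.name)


# Illustrative placement of the Army example; the scores are invented.
officer = Actor("Officer", autonomy=0.6, intelligence=0.7)
private = Actor("Private", autonomy=0.3, intelligence=0.4)
collaborate(officer, private)
```

The point of treating autonomy as a continuous value and only banding it afterward is that the gradient stays primary; the in/on/off-the-loop labels are just convenient cut points on it.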

Baselining Trust

If we thought baselining autonomy was hard, our next challenge was with the ‘simple’ word Trust.

Trust, from Old Norse traust (confidence, help, protection), carries the same connotations today as a “firm belief in the reliability, truth, or ability of someone or something.” - Oxford English Dictionary.[4]

This resonates with us as humans because our relationships are built on trust, and we recognize that trust can be earned and lost. Yet trust is impossible to quantify, since it is a subjective emotion susceptible to varying environmental conditions and other external stimuli.

The problem is that our very definition of trust requires us to apply human characteristics to non-human actors. The concept of trust very quickly morphs into synonyms such as belief, confidence, expectation, faith, and hope. These are all human-to-human concepts; none of them works well when applied to a mechanical system, and all of them are certainly impossible to verify and validate.

Using these terms also tends to anthropomorphize inanimate technology, which always leads to misinterpretations and misunderstandings, as we’ve found in previous essays about AI.

This challenge is best captured by the Committee on Autonomy Research for Civil Aviation:

“Trust is not an innate characteristic of a system; it is a perceived characteristic that is established through evidence and experience. To establish trust in [increasingly autonomous] systems, the basis on which humans trust these systems will need to change.”[5]

So we face a problem: trust is a uniquely human interaction, and when we project it onto other objects, it just doesn’t resonate.

Flipping the Script

So far, trying to apply the human concept of Trust to AI and autonomous systems has been a bust. Conversations on this topic are no less messy in technical essays, and none of them has succeeded in producing effective action. Worse, this confusion leads a lot of people on Social Media to completely misunderstand AI and autonomy. So why did we drag you through such a deep dive into the concept of trust? Because we were looking at the problem completely wrong!

So my team flipped the perspective, as we’ve learned to do in Systems Thinking.

Fundamentally, trust is not a constant. It’s highly subjective and situation-dependent. For example, I trust my kids, but not to drive a car yet. I trust them with knives, but I won’t allow juggling with them yet. I trust my wife, but not to lift and carry me from a burning building.

By reframing, we found we were using the concept of trust wrong when applying it to AI and autonomous systems. The shockingly simple solution was to view the relationship exclusively from the perspective of the human toward the technology, bind it to timing and situational dependency, and flip the term to Entrust.

Entrust (Verb): “assign[ing] the responsibility for doing something to (someone)” and “put[ting] (something) into someone’s care or protection.”

Simply changing from the noun trust to the verb entrust shifted the entire conversation from messy philosophy back to actionable science, bound by use cases, concepts of operations, context diagrams, and user profiles.

I don’t trust AI or Autonomous Systems. But I might entrust AI or an Autonomous System with specific tasks bound by control measures. This task-specific context allows us to better identify the circumstances and limitations under which we would entrust a technology, which in turn enables us to begin measuring it.
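The shift from trust to entrust can be expressed as a record that only exists for a named task, a time window, and a set of control measures. This is a minimal sketch under stated assumptions: the `Entrustment` class, its `permits` method, the driver-assist system, and the condition names are all hypothetical, chosen only to illustrate the idea, and are not a structure prescribed by FIDES.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Entrustment:
    """Hypothetical record: a human entrusts one task to a system for a bounded time."""
    system: str
    task: str
    start: datetime
    end: datetime
    control_measures: frozenset[str]  # conditions that must all hold (names are assumed)

    def permits(self, task: str, at: datetime, conditions: set[str]) -> bool:
        """True only for the entrusted task, inside the window, with every control measure met."""
        return (
            task == self.task
            and self.start <= at < self.end
            and self.control_measures <= conditions  # subset check: all controls satisfied
        )


grant = Entrustment(
    system="driver-assist",
    task="lane keeping",
    start=datetime(2023, 6, 1, 8, 0),
    end=datetime(2023, 6, 1, 9, 0),
    control_measures=frozenset({"highway", "daylight", "driver attentive"}),
)

conditions_met = {"highway", "daylight", "driver attentive"}
print(grant.permits("lane keeping", datetime(2023, 6, 1, 8, 30), conditions_met))  # True
print(grant.permits("parking", datetime(2023, 6, 1, 8, 30), conditions_met))       # False
```

Note that there is no general trust score anywhere in the sketch: the answer is always relative to a task, a moment, and its controls, much like trusting your kids with knives but not yet with the car.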

Measuring Trust

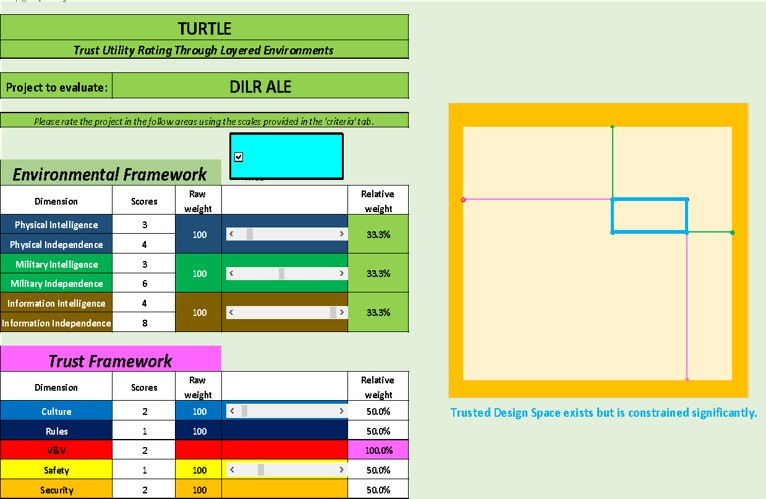

Once we shifted perspectives toward the task- and time-specific concept of entrusting, it became much easier to quantify whether or when we’d allow a system to take over. We then created a trust framework that broke trust into two main subcomponents, Ethics and Assurance, further decomposed as follows:

Ethics consists of two primary categories:

Rules - The fusion of hard and soft laws.

Hard Laws - Documented legal frameworks - Is it legal?

Soft Laws - Trade group and/or organizational policies, like the DoD’s Principles of AI Design, from which Lockheed Martin created its own internal AI framework - Will the organization allow it?

Culture - How will culture react either positively or negatively regardless of whether it is legal or allowable? Killer robots strike a different cultural chord than self-driving cars.

Assurance is the ability to certify that a system will perform as expected and includes:

Verification and Validation - These critical design considerations are pulled to the front of the design process to increase confidence in systems performance.

Safety - Is this safety-critical or mundane? (A medical device vs. ChatGPT)

Security - Measures how susceptible the system is to external manipulation or loss of control and helps contextualize the severity of a security penetration.
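One way to make this decomposition operational is to treat it as a checklist that must be answered for each specific task we are considering entrusting. This is a hypothetical sketch: the pass/fail booleans, the class names, and the field names are assumptions made for illustration, not the scoring method used in FIDES.

```python
from dataclasses import dataclass, fields


@dataclass
class Ethics:
    hard_law: bool  # Rules (hard law): is it legal?
    soft_law: bool  # Rules (soft law): will the organization allow it?
    culture: bool   # Culture: will people accept it, regardless of legality?


@dataclass
class Assurance:
    verified_and_validated: bool  # V&V evidence exists for this task
    safety_acceptable: bool       # risk is acceptable given how safety-critical the task is
    security_acceptable: bool     # exposure to manipulation or loss of control is tolerable


def failed_checks(ethics: Ethics, assurance: Assurance) -> list[str]:
    """List every subcomponent that blocks entrusting the task (an empty list means none)."""
    failures = []
    for group in (ethics, assurance):
        for f in fields(group):
            if not getattr(group, f.name):
                failures.append(f"{type(group).__name__}.{f.name}")
    return failures


# Hypothetical task: legal and allowed, but culturally contentious and not yet V&V'd.
blockers = failed_checks(
    Ethics(hard_law=True, soft_law=True, culture=False),
    Assurance(verified_and_validated=False, safety_acceptable=True, security_acceptable=True),
)
print(blockers)  # ['Ethics.culture', 'Assurance.verified_and_validated']
```

Returning the list of blockers, rather than a single yes/no, keeps the output actionable: it points at which part of ethics or assurance must change before the task could be entrusted.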

This framework helps establish the limitations of what we’d entrust an AI or autonomous system with by focusing on ethics and assurance, two critical design characteristics that are routinely overlooked.

Putting it all together

The Trust framework of FIDES allowed us to determine the minimum amount of intelligence (AI) and independence (autonomy) needed to meet the requirements, and then compare it with the maximum amount we’d allow (or entrust) the system to have. If the entrusted design space wasn’t large enough, we’d have to revisit the requirements, the trust limitations, or the design before we could move forward.
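The comparison described here is essentially an interval check on each axis: the minimum capability the requirements demand must not exceed the maximum we would entrust. A minimal sketch, assuming both quantities are scored on a common, hypothetical 0.0 to 1.0 scale per axis; the `Capability` class, the function names, and the example numbers are illustrative inventions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Capability:
    autonomy: float      # independence, 0.0 to 1.0 (assumed scale)
    intelligence: float  # AI complexity, 0.0 to 1.0 (assumed scale)


def entrusted_design_space(required: Capability, entrusted: Capability) -> dict[str, float]:
    """Margin per axis between the maximum we'd entrust and the minimum the requirements need.

    A negative margin means the design space is empty on that axis, so the
    requirements, the trust limitations, or the design must be revisited.
    """
    return {
        "autonomy": entrusted.autonomy - required.autonomy,
        "intelligence": entrusted.intelligence - required.intelligence,
    }


def can_proceed(required: Capability, entrusted: Capability) -> bool:
    """True when every axis has a non-negative margin."""
    return all(margin >= 0 for margin in entrusted_design_space(required, entrusted).values())


required = Capability(autonomy=0.7, intelligence=0.5)
entrusted = Capability(autonomy=0.6, intelligence=0.8)
print(can_proceed(required, entrusted))  # False (autonomy: 0.7 required, only 0.6 entrusted)
```

Reporting the margin per axis, rather than only the final verdict, shows where the gap is, which is exactly what you need when deciding whether to change the requirements, the trust limitations, or the design.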

Imagine if today’s AI tools were developed that way! How much less panic might there be right now, and how many better design decisions could be made? Instead, we have groups of people blithely running around ignoring the very real consequences while others panic about existential threats based on ethereal concepts that are difficult to pin down.

The trust framework of FIDES was just one part of a more detailed systems engineering design process. Instead of just throwing technology at the wall to see what sticks, that process allowed us to define key requirements, environments, and trust considerations. These in turn better defined Operational Architectures, Autonomous Behavior Characteristics (ABCs), and Root Autonomous Capabilities (RACs), which allow us to create systems that are usable, safe, and entrusted. (More on these has been published in the Defense Systems Information Analysis Center Journal.)

Conclusion

While this topic got a bit more technical than many we cover here, I want to go back and highlight the core nugget we discovered: A simple reframe of a messy concept can create simplicity, elegance, and actionability where before you had feeling, subjectivity, and hand-waving. Instead of forcing non-human systems to behave in human ways, we can identify the specific tasks and limitations the technology can handle and pair it with people in a more human-centric manner.

Our method allows us to execute complex technology integration with confidence, while everything else so far has led to the dumpster fires of public opinion, technology failures, and excessive, misplaced hype around AI and self-driving cars this past year.

It’s a strong case study in the power of reframing, and it demonstrates how we can take hyper-complex systems like AI and Autonomy and break them down intelligently so that simple solutions can have incredible benefits.

The trust and environmental calculator is a real thing, and we welcome anyone who’d like to collaborate to help us bring it to the next level. (Full draft paper here) LaSalle Browne has already begun building on this in his well-written Medium article, which demonstrates the power of shifting from trusting a system to entrusting it with specific tasks for specific times.

Enjoyed this post? Hit the ❤️ button above or below because it helps more people discover Substacks like this one, and that’s a great thing. Also, please share here or in your network to help us grow.

Polymathic Being is a reader-supported publication. To receive new posts and support my work, consider becoming a free or paid subscriber.

Further Reading from Authors I really appreciate

I highly recommend the following Substacks for their great content and complementary explorations of topics that Polymathic Being shares.

Goatfury Writes - All-around great daily essays

Never Stop Learning - Insightful Life Tips and Tricks

Cyborgs Writing - Highly useful insights into using AI for writing

One Useful Thing - Practical AI

Educating AI - Integrating AI into education

Mostly Harmless Ideas - Computer Science for Everyone

[1] Additional thanks to Dr. Mark Waltensperger, Jerry Franke, Patrick Howley, and Erica Cruz for providing insights and inspiration in maturing these frameworks.

[2] Paper and images were released as Unrestricted Content from Lockheed Martin under PIRA CET202109005, ORL201912005, CET202005003, and others.

[3] Many definitions fall into the cognitive and moral concepts of human autonomy without recognizing that humans aren’t fully autonomous, since we are bound by biology, society, and governmental rules.

[4] Oxford University Press. Oxford English Dictionary. Oxford: Oxford University Press; 2021.

[5] Committee on Autonomy Research for Civil Aviation. Autonomy Research for Civil Aviation: Toward a New Era of Flight. Washington, DC: National Academies Press; 2014.
