AI Computes; Humans Think

Understanding Humanity's Superpower

Welcome to Polymathic Being, a place to explore counterintuitive insights across multiple domains. These essays take common topics and investigate them from different perspectives and disciplines to come up with unique insights and solutions.

Today's topic unlocks humanity’s superpower: the ability to think critically, ask questions, and form, unform, and reform ideas. It’s a capability AI currently lacks, and one that underpins Polymathic thinking and feeds into learning, unlearning, and relearning. Once we understand this ability, we can properly contextualize AI as the useful but limited assistant that it is.

Introduction

AI computes, humans think. When humans think, they ask questions because they are curious. AI works only with what it has and asks for nothing more.

This is an important distinction that often goes overlooked. If you ask ChatGPT a question, it will churn out an answer. To get it to ask for more information, you have to instruct it to do so, which then becomes a separate sequence of activities. It isn’t actually asking you for more information; it’s more of a ‘call and response.’ It’s not curious, and it’s never confused.

As Birgitte Rasine discusses in her essay Hallucination Nation - Part I, what we anthropomorphize as ‘hallucinations’ are actually errors. Humans hallucinate; AI makes errors in computation. While this might seem trivial at first, it highlights yet another way in which how we think about AI can shape how we interpret it.

Now, that may feel a little philosophical, but it’s important, because when we previously discussed the trifecta of Critical Thinking, we identified three components of the concept:

1. Knowledge Management

2. Logical Structuring

3. Application of Critique

Number 3 relates to the ‘Critical’ part, and the first two relate to the ‘Thinking’ part. Humans do all three when they think critically; right now, AI can only do #2.

AI is fed structured knowledge and then computes a logical response in the form of predictive text or image completion, but it has no real way to critique that response. I’d also add that even #2 is limited, because AI can’t form, unform, and reform its logic to play with ideas. If you ask the exact same question again, it will often give you a totally different answer.

Some might counter that Microsoft Bing with ChatGPT can search for information it hasn’t been fed, but it does so through highly structured methods, similar to Bing Search without ChatGPT and to how Google does it. The difference is that it now summarizes a series of results for the user.

Humans think and AIs compute.

In application

Once we understand the capabilities and limitations of AI, and (I hope by now) realize that anthropomorphizing it is something we should avoid, we can better see how to put AI to use in ways that genuinely help move humanity forward.

To start with, as Ethan Mollick captures in his essay On-Boarding your AI intern, we can use AI to handle routine tasks and data aggregation. I like to use it to summarize articles to see if I’ve missed something. I’ve also used it to poke holes in arguments, provide counterarguments, and uncover sources I hadn’t thought of.

Instead of beating your head against a wall trying to hash out metrics, key performance indicators (KPIs), or critical data sources for a technical brief, you can ask ChatGPT to provide a table of options that you can pick from to flesh out the idea.

For example, here is a list of KPIs I put together for a client, just to get the conversation moving when they hit a roadblock over what they really wanted. I worked with ChatGPT to point it in the right direction, then culled and redirected its output using the knowledge management we discussed, applied my own logical structuring and restructuring, and critiqued the result.

It was enough to get my client up and running when they’d been stuck before.

Mark Palmer touches on a similar theme in The Joy of Generative AI Cocreation, where he reminds us that:

When cameras were invented in the 1800s, painters revolted. They argued that photography wasn't art. Eventually, attitudes changed, and artists emerged, from Henri Cartier-Bresson to Richard Avedon to Diane Arbus to Annie Leibovitz, each armed with a unique point of view.

It’s a great point, and one to remember as many artists today revolt in much the same way painters did 150 years ago. Fundamentally, they aren’t distinguishing between thinking and computing. Critical Thinking is what positions humans not to be threatened by AI, but to use it as we have used so many other technologies throughout human advancement.

Summary

AI carries risks, just as every level of technology does. When OpenAI CEO Sam Altman talks of extinction-level threats from AI, I have to chuckle. His company created ChatGPT. The only extinction he’s worried about right now is that of his business at the hands of the competition. Pausing development puts a market lock on ChatGPT and allows him to rake in money.

Maybe I’m being cynical there, but I’m an optimist about what AI can do, when properly understood, as an Intern or a Cocreator that unlocks so much more of our potential.

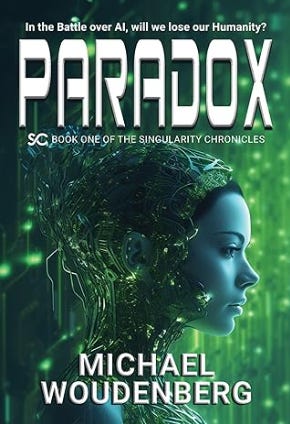

In fact, a year ago I wouldn’t have been able to come up with the cover of my book, and my friend Matt wouldn’t have been able to polish it, without significant investment, frustration, and time spent hiring an expensive designer. Now we can use our own vision and AI to make it a reality.

As long as we remember that our superpower lies in Critical Thinking, which involves knowledge management, logical structuring, and applying critique, we can recognize that this is something AI cannot do right now. I think many people are worried because they, too, struggle to think critically, but that isn’t an inherent limitation of being human. Once we put AI in the right context, we can see how it can be a great co-creator and how we can use it to augment our critical thinking and advance human capability.

This essay became a foundational element for my debut novel, Paradox, where the protagonist Kira and her friends puzzle through what AI really is in Chapter 3.


Further Reading from Authors I really appreciate

I highly recommend the following Substacks for their great content and complementary explorations of topics that Polymathic Being shares.


While AI can compute and provide responses based on existing knowledge, it lacks the depth of understanding and the capacity for critical thinking that humans possess. AI models don't experience genuine curiosity or confusion and can't ask probing questions to seek a deeper understanding. This limitation means that AI's responses may lack nuance or context, and they might not always consider the ethical or moral implications of their answers.

When integrating technology, including AI, into our lives, it is of utmost importance to approach it ethically and responsibly. This involves several key considerations. First, we need to ensure that the data and information fed to AI models are diverse and representative of the real world. Biases present in the data can be inadvertently amplified by AI, leading to unfair outcomes. Careful data curation and regular evaluation are essential to mitigate these biases. Ongoing monitoring is also necessary to identify and rectify any unintended consequences or emergent biases that arise from AI systems, and regular audits of AI algorithms and their impact on individuals and society can help address emerging issues and refine the technology.

Second, transparency and explainability are crucial. AI algorithms can be highly complex, making it difficult for users to understand how and why certain decisions are reached. Developers and organizations should explain AI's decision-making processes in a clear and understandable manner, allowing for accountability and avoiding the creation of "black box" systems.

However, I have a question: could these hallucinations be refined and one day indicate an evolution of intelligence, as AI gains access to different knowledge bases and uses algorithms to apply logic and decision-making to map out an approximate response to the prompt provided? Is our realignment meant to keep us at the center of this systemic evolution, limiting AI's agency to preserve itself or to act on its own ability to think? Does AI arrive at a logic that aligns with reality much as ours does, perhaps even surpassing our understanding, or does the realignment limit its responses? If AI is aligned with the latest interpretation of the discipline it is being integrated into, must emergent knowledge always be validated by human critical thinking?

As usual, Michael from Polymathic Being nails it: AI computes, humans think.